How about a reasonably complete scanned archive of BYTE magazine?


Heh. “Horse Pocket.”

This is some high-quality Lego nerdery right here

This surprises exactly no one

Do you like watches? I like watches.

Before I was absorbed into Apple Watch Borg, I was a longtime devotee of mechanical wristwatches. There’s something beautiful about them; a mechanical movement is the culmination of tech first used hundreds of years ago. A clockmaker from the 17th century could look inside my Omega and recognize the techniques even if he wouldn’t be able to understand how we got the device so small. It’s a lovely, lovely thing.

But most people have no real idea how they work beyond “uh, I wind it and it keeps time.” I sure hope you are just as delighted as I was when you look over, and hopefully read, this spectacular explainer about how mechanical watches work. It demystifies words like “escapement,” and details clearly, from a very simple and easily understood baseline, how a watch works.

Make time. This is what the web is FOR.

(As a bonus, allow me to point out some other topics the author has given the same treatment — it’s amazing. Gears! Cameras and lenses! GPS! The internal combustion engine! It’s deep-dive paradise! Creating these beautiful, interactive pieces is a hobby for the author, but that doesn’t mean you shouldn’t consider becoming a patron anyway. It’s beautiful work, well done, and authoritative, that helps explain the complex topics around us. In this, he makes the world a better place.)

Dept of PERFECT things for GenX Kids

The Saturday Afternoon Ikea Trip Simulator is a text adventure about Ikea. Enjoy.

Time, man.

Hard to believe, but Steve Jobs died 10 years ago yesterday.

What do we say to the god of death?

PROTIP: Before you start mucking with your .emacs….


Scunthorpe in reverse

In software development, there’s a thing called the Scunthorpe Problem. It’s not some weird, arcane topic about memory allocation; it’s actually bone simple. The central question is: “How do you police text inputs to keep naughty words out?”

The naive answer is simple string matching, so you’d check to see if the input contained any of a set of rude words.

The problem then crops up, because there are nontrivial legitimate occurrences of a number of those sequences of letters, and dumb rude-word-police algorithms that just check for “contains” will kick out all sorts of perfectly reasonable inputs. This leads to a number of annoyed customers, not the least of whom would be the denizens of a quaint village in Lincolnshire. Or men named “Dick,” say. (The first link in this post includes a list of some infamous examples, including blocked searches for “Superbowl XXX” (because “XXX” means porn), or the blocked domain registration for shittakemushrooms.com, etc.)
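For my nerds, here is a rough Python sketch of the failure mode. The blocklist and function names are made up for illustration (the two entries are just the examples mentioned above), and this is nobody's production filter. It shows the plain “contains” check next to a slightly less dumb whole-word check.

```python
import re

# Hypothetical blocklist; both entries come from the examples above.
BLOCKLIST = ("xxx", "shit")


def naive_is_rude(text: str) -> bool:
    """The 'contains' check: flags any input holding a blocked substring."""
    lowered = text.lower()
    return any(word in lowered for word in BLOCKLIST)


def whole_word_is_rude(text: str) -> bool:
    """Only flags a blocked word when it stands alone as a whole word."""
    pattern = r"\b(?:" + "|".join(re.escape(w) for w in BLOCKLIST) + r")\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


for sample in ("shittakemushrooms.com", "Superbowl XXX"):
    print(sample, naive_is_rude(sample), whole_word_is_rude(sample))
```

The whole-word version lets the mushroom domain through, but it still blocks the football search, because there the offending letters really do stand alone.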

So you have to be smarter.

But you can also turn this around, and that’s what this post is about. If you’re manipulating user names to create something unique, you should be cautious about your recipe, and you probably SHOULD use a fairly dumb string filter to alert you if your proposed scheme results in unfortunate combinations.

To Wit: Our customer base at work consists largely of big government contracting firms, and in this pond there is near-constant merger/acquisition/spin-out behavior. This leads, inevitably, to changes in people’s email addresses. MOST of the time, this is no big deal: Joe.Blow@CompanyA.com becomes Joe.Blow@CompanyB.com.

However, in one such case happening now, the new company has shifted to first-initial-last-name for emails — from Joe.Blow to jblow, for example. This SEEMS innocuous, until you come to the case of my client contact named something like Steve Hittman.

I mean, it could be something else. It could be Francis Uckley. Or Charles Unter. But you get the idea. (It is not any of these, but it’s just as bad.)

One wonders when the Exchange administrator at the new firm will notice, and what — if anything — they do about it.
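The kind of dumb pre-flight check I mean could be as small as this. It is only a sketch: the one-word blocklist and the function names are hypothetical, and it assumes names arrive as simple “First Last” strings, which real names often do not.

```python
# Hypothetical blocklist; a real one would be longer.
BLOCKLIST = ("shit",)


def first_initial_last_name(full_name: str) -> str:
    """Build the proposed mailbox name: first initial plus last name."""
    parts = full_name.split()
    return (parts[0][0] + parts[-1]).lower()


def flag_unfortunate(names):
    """Return (name, mailbox) pairs whose mailbox contains a blocked word."""
    flagged = []
    for name in names:
        mailbox = first_initial_last_name(name)
        # Plain substring matching is exactly what we want here: false
        # positives only cost a human a second look before rollout.
        if any(word in mailbox for word in BLOCKLIST):
            flagged.append((name, mailbox))
    return flagged


print(flag_unfortunate(["Joe Blow", "Steve Hittman"]))
```

Run it over the whole directory before the migration, and somebody gets to make an exception quietly instead of after the fact.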

“32-bit unsigned integer ought to be big enough for anyone”

Except, well, Berkshire Hathaway.

Warren Buffett’s company has eschewed stock splits forEVER, and as of now it trades at an eye-popping $429,172.43 per share. (The next priciest issue is around $5k.)

My Nerds are with me already, but for those of you not in the tribe:

You’ve probably noticed some numbers show up as “limits” in computing. One common one is 255. Lots of data input fields, for example, limit you to 255 characters. You may or may not have ever wondered why, but I’m gonna tell you anyway: Remember that, at the end of the day, computers are powered by tiny tiny circuits, and at that level everything is either “on” or “off.” That’s binary. With a bit of handwaving, you can see how the limit of number storage for a given variable type would be tied directly to how much memory is set aside to store that variable type, and the limit can always be expressed as a power of 2 (because with binary, there are 2 states: on or off).

Two to the 8th power is 256, so 8 bits can hold 256 distinct values: 0 through 255.
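If you want to see the pattern for yourself, here is a tiny Python sketch (the function name is mine). It prints how many values fit in a given number of bits, and the largest unsigned number that results.

```python
def unsigned_range(bits: int) -> tuple[int, int]:
    """Return (how many distinct values, largest value) for an unsigned field."""
    count = 2 ** bits
    return count, count - 1  # counting starts at 0, so the max is one less


for bits in (8, 16, 32):
    count, largest = unsigned_range(bits)
    print(f"{bits:>2} bits: {count:,} values, 0 through {largest:,}")
```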

Now, back to stocks.

NASDAQ, on which Berkshire trades, used a 32-bit integer data type to store stock prices. A regular 32-bit int has a range that’s centered on 0, but since stocks can’t have a negative price they used the unsigned version. 2 to the 32nd power is 4,294,967,296.

NASDAQ, sensibly, reserves the 4 rightmost digits for the fraction, so the largest stock price they can accommodate is $429,496.7295 per share, a value that Berkshire is fast approaching. NASDAQ is responding by rushing out a fix, but I think we all know how well rushed fixes go.
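Here is the arithmetic as a Python sketch. To be clear, this is my illustration of the scheme as described (an unsigned 32-bit field holding ten-thousandths of a dollar), not NASDAQ's actual code, and the names are invented.

```python
from decimal import Decimal

SCALE = 10_000         # four implied decimal places (assumed from the description)
MAX_RAW = 2 ** 32 - 1  # largest value an unsigned 32-bit field can hold


def to_raw(price: str) -> int:
    """Convert a dollar price to the scaled integer that would be stored."""
    raw = int(Decimal(price) * SCALE)
    if not 0 <= raw <= MAX_RAW:
        raise OverflowError(f"{price} does not fit in 32 unsigned bits")
    return raw


max_price = Decimal(MAX_RAW) / SCALE
print(max_price)            # the ceiling, in dollars
print(to_raw("429172.43"))  # the price quoted above still fits
```

Anything above that ceiling simply has no representation, which is why a fix was needed.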

(If you’re thinking “wait, isn’t this kind of the same thing as the Y2K problem?”, well, you’re not entirely wrong. But given that Berkshire is SUPER weird in refusing to split, and that the next largest issue is two orders of magnitude away from the limit, I’m inclined to give the NASDAQ designers a pass here. Odds are, they’ve known this was coming for a while; my sense is that probably some of them wondered if Buffett would die first and be followed by someone less split-averse.)

How Microsoft is Sucking Today

I have a LOT of stuff in Dropbox. It’s a great tool, and it’s fairly priced, and as such I’ve been using it across Mac, Windows, iOS and now Linux for a decade. It’s great.

However, I don’t always want EVERYTHING. I’ve realized that while this is great for iOS access, or for keeping my main working files synced across my main, backup, and Windows laptops, it’s a bad fit for temporary working files that I may want across multiple VMs within our virtual environments, so I turned on OneDrive for this.

I figured I’d just pull in files for ClientX and ClientY, because that’s what I’m working with right now. (Dropbox HAS selective sync, but I have so much stuff in mine it’s easier to just manage this in parallel.)

Well. Now’s when I remember that MSFT can’t really do ANYTHING that isn’t fundamentally weird or broken or otherwise infected with terrible ideas from marketing. And here’s the example:

OneDrive assumes you want to include your Desktop, Documents, Pictures, and a few other things in your OneDrive sync automatically, and will not allow you to opt out because these folders are “special.” WTF, right?

I didn’t notice this until my CustomerX environment suddenly had a desktop full of other random working files, which was unwelcome. I tried to disable Desktop sync, but was stiffarmed, so I went back to the “master” virtual machine and tried to disable it there. Nope, same message: Desktop is “special” and can’t be unselected.

What I COULD do was unselect everything WITHIN Desktop, so I did that.

And then all the files in my master desktop disappeared.

After some poking around, it turns out OneDrive just took fucking CONTROL and moved all that data into the special OneDrive Desktop. Why? Fuck you, that’s why. Same with Documents, etc. It’s like transparency and predictability are COMPLETELY UNKNOWN in Redmond. Seriously, how did this kind of behavior get past beta?

Dropbox doesn’t do shit like this. You tell it what folder to sync, and go from there. What’s wrong with that approach? I guess, it’s just insufficiently invasive and weird.

It’s not just about OneDrive, though. I spend a LOT of time in virtual meetings. We use GoToMeeting, but we also end up joining client meetings set up in WebEx or Teams or Skype or even Zoom — basically whatever they have, if they want to set up the meeting. (We VASTLY prefer GTM, because it works way better, so mostly it’s that, but sometimes you gotta go to their house, so to speak.)

The new version of Teams is installed on my Windows environments, but, turns out, you cannot use it to join meetings set up by organizations you’re not a member of on Windows or MacOS. The only supported way to join on those systems is to use Edge or Chrome.

WAT.

I don’t have Chrome installed anywhere, so this means it’s impossible to join Teams meetings with customers from my Mac at all. (I use my iPad.)

Again: What the fuck were they thinking here? No other meeting platform is so goofy and limited. Even Skype worked better.

I swear, MSFT has been awful way more often than they’ve been good for my entire career, and that’s nearly as long as MSFT has existed.

What keeps software people up at night

This Atlantic piece has a pretty alarming title — The Coming Software Apocalypse — but, well, it’s not entirely wrong.

Thirty years ago, we wrote (mostly) to the bare metal. The whole system was plausibly knowable. Now, software is built on software that’s built on software; it’s turtles all the way down, and it’s impossible to understand the entirety of ANY modern effort — because even if you have perfect knowledge of YOUR code (or your organization’s code), you’re dependent on libraries and systems running below you that are opaque.

If all this was just about controlling your VCR or your favorite Office app, it might not matter as much, but we are insanely cavalier about software quality in places where lives are at stake — in 911 systems, in cars, and especially in avionics. But think also about power plants, or other critical areas of infrastructure. Software quality (avoidance of bugs, from the benign to the catastrophic) and software security (keeping others from exploiting the code) are quite often afterthoughts, if they’re thought of AT ALL.

(Incidentally, this is why most software people stay far, far away from “internet of things” gadgets controlled via apps and the cloud. They’re AWFUL from a security POV. And so is your car, most likely. And so is your so-called SmartTV. At our house, the Samsung isn’t even on the network — we use it as a dumb display panel, because we do not, and should not, trust Samsung’s code.)

The piece goes into some ways we might be able to ameliorate this in the future, and some of the steps are very technical and some honestly involve a bit of magical thinking. But a key aspect is taking these things seriously from the get-go, and not being cavalier about any of them (as, say, Jeep and Toyota have been).

F*ing Microsoft

Scientists renamed human genes to stop Microsoft Excel from misreading them as dates.

I swear to god, the most common thing said to an Office product MUST be “goddammit STOP HELPING ME.”

The S definitely does not stand for “super”

Somehow I missed it (well, not somehow; I know how), but about 2 years ago, Microsoft introduced something they call “Windows S Mode” on new non-professional editions of Windows.

If you’re in S mode, you literally cannot install any software that doesn’t come from the (hamstrung, poorly populated, very limited) Microsoft Store. I only ran into this today because we’re trying to get a new consultant up and running on the double, so we dropshipped a consumer grade laptop to him when Dell couldn’t get him a “real” one before December (about which: WTF?), and it came with Windows Home.

The new guy needed some help getting software installed, and so as is our usual approach I got him on a GoToMeeting session intending to use the meeting’s remote control features to get things rolling quickly. Except now GTM kind of biases toward its web app, which doesn’t allow remote control.

No problem; I’m used to walking folks through switching to the desktop app, which does support remote control.

Except the steps that usually result in downloading and running the GTM desktop installer kept shunting him into the Windows Store, in which there is no GTM app. WTF?

Oh yeah. S mode.

As an aside, let me say this: I know what they’re doing here. S Mode is an attempt to copy what Apple did several years ago. New Macs ship with an option set so that they’ll only run software from the Mac App Store. Superficially, this is the same, except:

On a Mac, changing the setting is dead easy; it’s just an option in the Mac version of the Control Panel. On Windows, you have to follow a much more complex path that requires you to have a sign-in with Microsoft.

On a Mac, you can just turn this setting back the other way any time you want. On Windows, it’s a one-way change. You can’t return to S mode later.

So even when Redmond copies, they fuck it up. I mean, I’m supportive of machines coming configured more for Aunt Millie than the likes of me, but the pathway out of this more limited mode has to be much, much more transparent and simple. Don’t get in my fucking way and tell me it’s for my own good. And for the love of Christ don’t do it on a computer where adults are trying to work.

Basic CD Drive Maintenance

How to remove ham from your disk drive.

Truly, God’s work.

Today in niche but huge news

This is a sentence that will make zero sense to most people, and cause jaws to drop for those that understand it: Microsoft will purchase Bethesda for north of $7B.

This is the biggest game deal ever. It means MSFT will now own top-tier properties like Fallout.

Lindsey Graham and Tom Cotton want to destroy the Internet

The new bill, called the Lawful Access to Encrypted Data Act, essentially outlaws end to end encryption that does not feature a back door, which means it outlaws any secure encryption at all.

It is not possible to create a secure encryption scheme that includes a back door. The existence of the back door means the existence of some sort of master key that will inevitably be leaked and misused. Insisting “but we’ll require a warrant” is cold comfort in light of that, and never mind that the whole warrant process for surveillance has been shown repeatedly to be rife with abuse itself.

This isn’t just about encrypted communication in WhatsApp. This touches every financial transaction online: every payroll deposit, every mortgage payment, every credit card charge. All of these things use secure encryption. And all of them will be made materially weaker and far, far easier to compromise by this bill.

Ars lays it out:

Encryption doesn’t work that way

Providing the sort of backdoor Graham and company keep asking for means, among other things, providing the service provider itself access to “encrypted” data. This, in turn, opens that provider’s customers up to privacy violations from the service provider—or rogue employees of the service provider—themselves, which in turn would break much of the security model of modern cloud services. This would gravely impact not only end consumer privacy but enterprise business security as well.

In recent years, large cloud providers such as Amazon, Microsoft, and Google have made big and successful pushes to convince large businesses to host increasingly confidential business data in their data centers. This is only feasible because of secure encryption using keys inaccessible to the cloud provider itself. Without provider-opaque encryption, those businesses would return to storing critically confidential data only in self-managed and controlled private data centers—increasing cost and decreasing scalability for those businesses.

This, of course, only scratches the surface of the true impact of such a misguided effort. Secure encryption is an already widely available technology, and it doesn’t require massive infrastructure to implement. There is no reason to assume that the very terrorists Graham, Cotton, and Blackburn invoke wouldn’t simply revert to privately managed software without holes poked in it were such a bill to pass.

There’s also no reason to assume that the service providers themselves would be the only ones able to access the critical loopholes LAEDA would require. It’s difficult to imagine that such vulnerabilities would not rapidly become widely known and be exploited by garden-variety criminals, foreign and domestic business espionage units, and foreign nations.

The advocacy group Fight for the Future issued the following statement (also in the Ars article):

Politicians who don’t understand how technology works need to stop introducing legislation like this. It’s embarrassing at this point. Encryption protects our hospitals, airports, and the water treatment facilities our children drink from. Security experts have warned over and over again that weakening encryption or installing back doors will make everyone less safe, not more safe. Full stop. Lawmakers need to reject the Lawful Access to Encrypted Data act along with the EARN IT act. These bills would enable mass government surveillance while doing nothing to make children, or anyone else, any safer.

At last! An easy way to quit vi!

(for my nerds only)

VI(M) is hard, and sometimes we need to take drastic measures. We understand your needs. Maybe you’re new on the job, and you forgot to set your default text editor to nano, emacs, gedit, whatever. VIM pops up and now you have to make a choice…

Dept. of Examples of How Windows Sucks

There are certain things you can’t name a file in Windows 10 due to design choices from MS-DOS.
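The names in question are the old DOS device names. Here is a rough Python sketch of a check, assuming the core list that is usually cited (CON, PRN, AUX, NUL, COM1 through COM9, LPT1 through LPT9). The function name is mine, and current Windows documentation may list a few more.

```python
# Core DOS device names; assumed list, not exhaustive.
RESERVED = (
    {"CON", "PRN", "AUX", "NUL"}
    | {f"COM{i}" for i in range(1, 10)}
    | {f"LPT{i}" for i in range(1, 10)}
)


def is_reserved_windows_name(filename: str) -> bool:
    """True if the part before the first dot is a reserved device name."""
    stem = filename.split(".")[0].rstrip(" ")
    return stem.upper() in RESERVED  # the check is case-insensitive


for name in ("con", "NUL.txt", "Com1.log", "console.txt", "report.txt"):
    print(name, is_reserved_windows_name(name))
```

Note that adding an extension does not help: the device name wins either way.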

Now, he tries to soften the suck here, noting the degree to which MSFT does things like this to ensure backwards compatibility. Bollocks. There’s no reason to continue this shit, especially when it comes (as it does) at the expense of modern function and stability — or correctness.

It’s that last bit that really points out MSFT’s ridiculousness here. 30 years ago, when Excel was first introduced, there was a well-known bug in Lotus 1-2-3 (the prior spreadsheet king); Lotus treated 1900 as a leap year, which it was NOT. Even so, Lotus accepted 2/29/1900 as a valid date.

And Microsoft broke Excel to mirror the behavior, and continues to “honor” this bullshit to this day; in fact, the bug is part of the requirements for the Office Open XML standard as a result.

(Don’t @ me about leap years. No, it’s not just every 4 years. It’s every 4 years UNLESS it’s a century year that is NOT divisible by 400. This is why, for your whole life, every-4-years works: 2000 was an exception to an exception that only comes around every 400 years.)
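For my nerds, the whole rule fits in one line of Python. This is a sketch of the Gregorian rule as stated above; the function name is mine, and the last line checks it against the standard library's `calendar.isleap`.

```python
import calendar


def is_leap(year: int) -> bool:
    """Every 4 years, except century years not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


print(is_leap(1900))  # the year Lotus got wrong
print(is_leap(2000))  # the exception to the exception

# Cross-check against the standard library over a few centuries.
print(all(is_leap(y) == calendar.isleap(y) for y in range(1600, 2401)))
```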

RADM Grace Hopper, on Letterman

Somehow, I had never seen this. It’s wonderful. She had just recently retired — as, at the time, a Commodore, which was eventually renamed Rear Admiral (Lower Half) for complicated Navy reasons — but you can see here she’s just as quick as a whip even with someone like Letterman. She even gives him a nanosecond.

I’ve posted here before about her; she is a giant and without her, modern computing would be very, very different. Hers is the only grave I’ve sought out at Arlington. As they said of Wren,

LECTOR SI MONUMENTUM REQUIRIS CIRCUMSPICE

Stop letting stupid companies write software

Turns out, if you have ever owned, leased, or rented a Ford, and you paired your phone to it via their FordPass app, odds are you can still control that car.

Dept. of Nerd History

Old computer geeks like me may enjoy this history of the rise and fall of OS/2 over at ArsTechnica.

“This is happening without us!”

Paul Allen died on Sunday.

It’s possible you don’t know, or don’t quite remember who he was, even if you’re nerdy enough to read this site. Allen was Bill Gates’ partner in founding Microsoft back in 1975.

Gates writes, in his remembrance of Allen:

In fact, Microsoft would never have happened without Paul. In December 1974, he and I were both living in the Boston area—he was working, and I was going to college. One day he came and got me, insisting that I rush over to a nearby newsstand with him. When we arrived, he showed me the cover of the January issue of Popular Electronics. It featured a new computer called the Altair 8800, which ran on a powerful new chip. Paul looked at me and said: “This is happening without us!” That moment marked the end of my college career and the beginning of our new company, Microsoft. It happened because of Paul.

This wonderful world of computing where I’ve made my life (*) since the early 80s was built by men like Allen and Gates and Jobs and Wozniak and others whose names you don’t know, like Dennis Ritchie. I’ve joked for years that these guys were the only Boomers I actually liked, and it’s not far from the truth. (Well, Ritchie was older than that, but still.)

Computing was always a young man’s game for most of my life, but all those young men are getting older, and now, well, they’re shuffling off this mortal coil. Sic transit gloria mundi.

(*: My whole life. My career. My education. A huge chunk of my social life. And yes, even Erin; longtime Heathen will recall it was a blast email from a mutual friend that reconnected us 17 years ago this summer.)

Death to the Bullshit Web

Have you noticed that, even though your computer is insanely fast, and your connection is faster still, web pages don’t seem to load any faster in 2018 than they did in 2008 or 1998?

Yeah. Me, too.

That’s the bullshit web.

Clever Girl(s)

I’m sure that we’re totally safe from robots, unless they figure out how to open doors.

Well, about that….

“There is really no way to know for sure whether the physical universe also uses invisible squirrel timers.”

Heh.

No, Tom, I will not play this with you.

I am certain that it is not exceptional for a game to exist, but be rarely played.

I am, however, reasonably certain that The Campaign for North Africa is perhaps the only game that has never, ever been completed, not even once, by people who are not clinically insane.

You remember those “bookcase games” published in the 1970s and 1980s, from companies like Avalon-Hill and the like? These are a long way from Monopoly; they’re intricate and complex and intended for adult players or very enthusiastic teens; many take multiple sittings to complete, even at an hour or two per session. Some people like this sort of thing very much, even today, in this era of simple iPhone games.

CNA is the apotheosis of that genre, and may also be its nadir. It is so unbelievably detailed as to be, more or less, unplayable. For example:

- It ships with 1,800 counter chits

- The map can cover multiple normal-sized tables

- The rulebook comes in three volumes

- Gameplay is absurdly detailed, even down to managing individual planes and pilots in a campaign-level simulation

This complexity, of course, comes at a tremendous cost: A full game of CNA will take an estimated 1,500 hours, and requires 10 people. To put that in perspective, a 40-hour-a-week job takes about 2,000 hours per year.

io9 has more, and there’s a MeFi thread as well.

Yet another reason to eschew Bose

A Chicago man is suing Bose, alleging his wireless, noise-canceling headphones are also sending information about his listening habits to a Bose partner called Segment.io via the companion smartphone app.

This kind of thing is simple to check, so it’s virtually certain that the allegation is true (especially since the man has engaged a respected law firm).

There remains no easy way for consumers to control phone-home behavior from apps or “internet of things” devices, because it’s all too new. Nerds like me can do it, but it’s still not simple, and it should be. In computer security, folks often try to pare down any given user or process’ permissions to the barest minimum required to do the task at hand; if more folks were able to apply that to bullshit like headphone companion apps and smart light switches, we’d all be better off.

Of course, the other takeaway is this: your fucking headphones shouldn’t need a goddamn app. That’s absurd. If you find your headphones have come with an app, RETURN THEM, because something dodgy is probably going on.

Windows 10 overrides user choice. This is bad.

Over at the EFF, they’ve got a good rundown of the problem.

Falsehoods Programmers Believe About Names

No, really, go read this.

How the Amiga and Commodore died

I’m not sure I’ve ever seen it all laid out quite this clearly before. If you lived through the era this is worth your time.

Is this the right room for an argument?

Best Dragoncon Group Cosplay EVER.

At DC, it’s all one story

This is a pretty great rundown of the history of continuity changes in the DC comics universe.

Non-nerds may wonder what that sentence means, so I’ll take a swing at a quickie explanation. “Continuity” in superhero comics refers to the overarching story. Each issue isn’t self-contained; they reference prior issues, and not just last month’s. Batman remembers fighting the Joker a year ago and ten years ago, and so forth. He knows he’s been friends with Superman for much of his life. These are just facts in the DC world.

Of course, then you have a problem, because both of those heroes started fighting crime nearly eight decades ago, and yet both are frozen in the prime of life despite having literally decades of experience in their roles — and the storytelling burden of a new issue every month.

(It’s worth noting that the only other form of storytelling that deals with such long-term continuously published continuity is the soap opera, but there, at least, you’re tied to reality because the actors age in real time.)

Comics address this with two main tools:

First, there’s something called a “ret-con,” which is short for “retroactive continuity.” When this happens, some prior fact in a story is changed, but without upending the whole world. Minor retcons happen all the time; a great “mainstream” example is flashbacks in The Simpsons, since they’re frozen in time in a 20+ year show. When the show started, a memory sequence from 20 years before would unequivocally place Homer in the 1970s, but more modern episodes shift his earlier life forward, right? That’s the kind of retcon.

The other one is the reboot, where massive amounts of prior story and history are jettisoned in favor of a blank-slate renewal with only certain base facts retained. That’s what the linked story is about. DC, the comics company behind Superman and Batman, started in the 1930s, and told stories of a bunch of heroes in addition to Superman, Batman, and Wonder Woman. But in the postwar years, interest in hero comics cratered and nearly all those hero titles (except the big three) were cancelled until a revival in the 1950s. The revival, though, fundamentally changed many of the characters: the original Flash wore a tin hat, for example, but the version resurrected in the 1950s is the one you probably think of when someone says “The Flash”; he’s Barry Allen in a red suit with a cowl and a lightning motif. (In comics, the original era is referred to as the Golden Age, and the 1950s revival is the Silver Age.)

Another excellent example of a reboot is what Abrams did with the 2009 Star Trek film. We see the same characters, and the same ship, but we’re telling new stories with them. The Chris Pine version of Kirk has never met Harvey Mudd, never seen a tribble, and so forth. The inclusion of “regular” Spock in the film gives us a link to the “normal” Trek universe, but it’s otherwise distinct, with its own threads of story and history to unfold, unencumbered by any of the history that’s been piling up since the original series aired.

Anyway, the storytelling problems that surface in comics are unique to the form (though kin to those faced by any long-running narrative universe, including soaps and certain long-running franchises like Star Trek and Doctor Who). This article is a fun exploration of how DC has addressed them in the last eighty years.

Dept. of Old Nerds

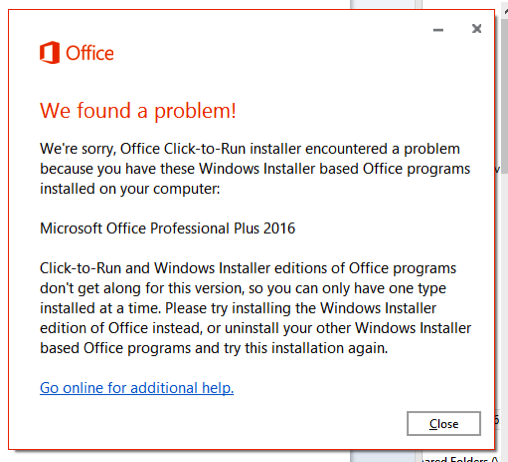

Microsoft: Still failing

I’m installing Project, after having installed Office. I got this error. How on earth is this even a thing that you let happen?

I mean, seriously. I’m a giant nerd with 25 years of experience, and I can barely parse what the hell they’re talking about. How exactly is a normal human supposed to respond to this?

Jesus X. Christ.

Got stung by the unwanted Win10 upgrade? Maybe you should sue.

This woman did, and MSFT folded to the tune of TEN GRAND.

Dept. of Updates, Comic Shop Division

Right, so, there have been some Developments.

When I posted my previous entry about Bedrock, I also tweeted it at them hoping to get some kind of reaction. Sure enough, a couple days later, I got a message on Facebook from the manager of the Washington Avenue store. To call it an abject apology would be to understate things by several orders of magnitude. John Scalzi has, somewhat famously, attempted to quantify what makes a good apology, and the Bedrock manager hit all the right notes.

In part:

I made a mistake, plain and simple. There is no store policy or company policy about having a phone number for having a box, it is just a requirement that I prefer we have. I like being able to reach people directly concerning any issues with the box. I set your form off to the side, again my decision, making a terrible assumption about when you would next be in the store. I incorrectly assumed you would be in the next week or the week after, I would then get the phone number and then enter the information in our system and get everything started. This, obviously, did not happen. I read your review on your blog and your anger is well justified. I should have reached out to you via email. Especially considering I deemed the phone number so important, but, in all honesty, did not even consider that. Again, this is all my fault and my responsibility, not an indictment of Bedrock City comics.

He doesn’t stutter or prevaricate; he takes full responsibility, and then comes the kicker: if I’d give them another shot, they’ll supply any issues I missed on their dime, even if they have to go to other dealers to do it.

Yeah, I can do that.

I had a bit of business travel last week, but yesterday Mrs Heathen and I went into Bedrock again and met with this manager. We gathered what they had of my pulls in house, plus another trade or two, and took inventory of what I needed that they didn’t have yet. Turns out I haven’t missed many issues after all, which is nice. Nicer still, the missing ones are from big-print-run Marvel books, so finding them will be trivial for Bedrock. (I should have them later this week, actually.) We started over, more or less, and I left there feeling good about the shop and about Eric the manager in particular, which is a long way from where I was on May 25. He didn’t have to reach out to me at all; that took actual guts and integrity, and he deserves praise for having the stones to do it. Moreover, they certainly didn’t have to comp my entire pile yesterday, which rang to the tune of $40 or $50. But they did, to make up for the hassle, and that’s how you recover when you fuck up.

That’s the lesson here, really. Every business will make mistakes, even good ones run with the best of intentions. The trick is all in how you recover, and Bedrock (and Eric) nailed the recovery in a way that’s really only happened to me one other time.

Nearly two decades ago, something similar happened to me with a car detailing shop: they lost my car key, and since it was a Porsche I’d bought used, it was the only one I had. Before I could even say a word, though, they were outlining how they’d fix it. Obviously, they’d pay for my replacement key. But also I’d have the use of the owner’s truck for the duration. They’d guard my car — it couldn’t be moved or locked without the key — until a key showed up from Porsche America, which turned out to be about 72 hours. And after it was all over, they’d clean up the various rock dings on the front air dam of the car for free, which I never would’ve done because it was about $500 worth of work. Ask me now: have I ever used another detailing shop? Nope.

It’s early yet, but right about now my bet’s that I’ll be buying comics at Bedrock for a long time, too.

Why are comics shops in trouble?

THERE’S AN UPDATE. SCROLL UP. HERE’S THE LINK.

It’s not so much online, or other demands on our attention. It’s partly gatekeeping behavior, I’m sure, but in my personal experience, the biggest single reason?

They are bad at their jobs.

Let me explain.

Comics are a periodical medium; it used to be they were tied to months in the calendar, but that’s not universally true anymore; new issues just come out when they come out. People keep up by dealing with a local comic shop and setting up what’s called a “pull list.” You go in, fill out a form with some contact data, and make a list of the titles you want “pulled” for you when they come out. Then, you go to the store at your leisure to pick up your accumulated comics.

Big fans who read many titles do this every Wednesday, which is traditionally when new comics come out (you may have seen DC fans in your life talking about buying a highly-anticipated comic at midnight last night, i.e. on the first moments of Wednesday; it’s also been a plot point in Big Bang Theory more than once). People like me who read fewer titles probably go in once a month, or even every couple of months, but the principle is the same.

For years I had a pull list at Nan’s Comics and Games. I finally quit when, for the umpteenth time, they just skipped a couple of my issues. This is a “you had one job” kind of situation, right? If I have The Avengers on my list, I expect to get every issue. If I travel a bunch and don’t come in for 6 or 8 weeks, the point of the pull list is that I don’t miss anything. Nan’s couldn’t be bothered to actually do my pulls reliably, and so I just quit reading monthlies because it was too much trouble.

Back in early April, though, I was tempted back by some really great writing by folks like Kelly Sue DeConnick, Matt Fraction, and others, plus the Ta-Nehisi Coates-penned revival of Black Panther. Returning to Nan’s was out of the question, but there’s a much shinier and newer shop — a branch of Bedrock City, which has several locations in Houston — up on Washington Avenue. It’s actually next door to my doctor, even (which is convenient for him, since he’s a big nerd too).

I went in and picked 5 or 6 titles, and then filled out their pull-list paperwork. They had my name, my email, my physical address, and I even provided a credit card number after we discussed the fact that I might not drop by for a month or so at a time. “If we have your card, we’ll just charge you for them after a month or two, and nobody worries about it.” Fine by me! Let’s do this! I had the guy review the paperwork to ensure all was squared away — this turns out to be important — and left looking forward to my comics.

I got busy. I ride a lot, and work’s been crazy. I was driving by Bedrock today, though, and — fueled by the knowledge that Black Panther #2 had just come out; n.b. I bought the first one when I was in there in January or February, so this is what “monthly” looks like for some titles — I stopped by to pick up my comics. I was even a little excited!

The girl at the desk, though, killed that with a quickness. “We don’t have a pull list for Farmer,” she said after checking the computer.

WAT.

“I’m pretty sure you do.”

She poked around a bit, opened a drawer, and pulled my form out of some kind of dead letter file. On the front was a PostIt saying that, because they didn’t have my phone number, they couldn’t set up the pull.

Mind you, they reviewed my paperwork back in April, and pronounced it fine. Plus, my email address was RIGHT THERE ON THE FORM, in the box marked “email,” so it’s not like they couldn’t reach me. Even if they were dead set on having the phone number for the pull (which the guy in January didn’t care about), you’d think they could’ve reached out by email to get the phone number. These are people who, presumably, also care about comics, and understand that missing issues isn’t cool.

Apparently not. By now, I’ve missed at least one issue of everything on my list other than Panther, which (as I said) just came out.

I bought that, and only that, and told them not to bother with the pull after all.

Fuck you, Bedrock. I’ll buy the rest of the Panther issues somewhere else when they come out. The other titles I’ll get digitally, or buy the trade paperbacks when they come out. From AMAZON.

Why are comics shops in trouble? Shit like this right here, boyo. Shit like this right here.

(For the record, the girl behind the counter seemed to understand this was bullshit, but made clear the decision to behave this way wasn’t hers.)

In the future, you can watch your home burn down from anywhere in the world!

A Fort McMurray family had a Canary system, apparently, and so they were able to watch their home burn down in real time on their phones. The fire comes in through the window at the left.

There’s audio. You can hear the glass breaking, and, eventually (but far too late), the smoke detectors going off around the time visibility becomes 0. The video continues after you have no more meaningful visuals, but given that audio continues the whole time I’m not sure if this is because the smoke was too thick, or because the video element perished ahead of the rest of the device.

In any case: Eeek.

My Macbook Pro is cool, but this is epic

Who else remembers the Twentieth Anniversary Macintosh, released to mark the company’s 20th birthday in 1997? (See also Wikipedia.)

N.B. that this computer is now 20 years old, but somehow manages not to look terribly dated. Amazing.

(More Mac nostalgia in this MeFi thread, if you’re into it.)

I feel kinda bad for the robot.

In which I’m nerdy about keyboards

So I have a great keyboard, but it turns out one of the things that I really love about it — fantastic mechanical switches that feel really nice under my fingers — also makes it kind of loud, and even though I work at home, turns out I’m on the phone a LOT. And even though I use a headset most of the time, the Kinesis is clickyclacky enough that people ask me to mute ALL THE DAMN TIME.

Well, since the Kinesis — which was a gift from a pro bono client ten years ago, which shocks the hell out of me; who makes keyboards that last ten years? — really needs some TLC, I figured I’d try out one of the more well-reviewed other ergo keyboards in the interim: the Microsoft Sculpt.

Yeah, I know. But, turns out, lots of Mac nerds love it, and it’s cheap (or, at least, it’s cheap compared to the Kinesis), and so now I’m typing this on one.

It’s true: key feel is, while inferior to the mechanicals on the Kinesis, still far better than I’d expected. But it’s a different beast. All ergo keyboards have some idiosyncrasies; my Kinesis has for years been the functional equivalent of typing on an RPN calculator. Guests just can’t use it. But it’s not just the radical shape of the thing — even though the keys are essentially in a standard Qwerty layout, the modifiers are all over the place. I’ve been hitting enter with my right thumb for a decade.

Space is there, too. Backspace and delete are under your left thumb. Control, command, and alt are thumb keys on the Kinesis layout, too. That’s not all, either; the arrow keys are split between left and right (below the bottom row on the left) and up and down (same position on the right). To describe the Kinesis as eccentric is to understate things rather dramatically, but holy hell is it comfortable once you get the hang of it. (Obviously, all this assumes a pretty complete commitment to touch-typing; the last time I checked, I was somewhere north of 90 words per minute.)

Microsoft’s entry here is almost quotidian compared to my Old Reliable. It’s still goofy compared to a flat keyboard, but it’s far closer to the norm than the Kinesis. It’s got a normal (but split) spacebar. The arrows are in an inverted T on the right, as you’d expect. The biggest shocks for me, in terms of adaptation, are doubtless going to be:

- The placement of control/command/option on either side of the space bar, which isn’t terribly comfortable, and is especially hostile to the motions I’ve learned on the Kinesis for Cmd-Tab and such; and

- The utterly baffling choice they’ve made with the number row.

On the second point, let me explain. On the old keyboard the split was logical: 1-5 on the left and 6-0 on the right. This allows the keyboard to be used just as a traditional touch typist would, and it’s a critical thing.

Microsoft has, once again, gone its own way for no discernible reason (and has apparently been doing this since their first “Natural” keyboard back in the 1990s). The numbers are unbalanced; the left hand handles 1 through 6, with 7-0 on the right. This might seem like a minor change, but as I noted it’s like nails on a chalkboard for anyone who actually knows how to type.

Plus, numbers aren’t the end of it — obviously, if the number row is shifted left, so too are the shifted versions of those keys; shift-8 is asterisk on most any keyboard, but now the eight and the asterisk are in the wrong spot. Plus the keys to the right of zero are now in the wrong spot, too (hyphen/underscore and plus/equals).

It’s a little thing, but it may well be enough to have me send this thing back. I can’t even fix it with remapping software, because there aren’t enough keys on the top row to shift things right (backspace is to the right of plus/equals).
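For the nerds who want to see the problem spelled out, here's a rough Python sketch of the two number-row splits described above. The splits themselves come straight from this post; the list of physical keys on the right half of the Sculpt's top row (`SCULPT_RIGHT_TOP_ROW`) is my assumption for illustration, as are all the function names.

```python
# Number-row split on each keyboard, as described in the post.
KINESIS = {"left": list("12345"), "right": list("67890")}
SCULPT = {"left": list("123456"), "right": list("7890")}


def misplaced_digits(expected, actual):
    """Return the digits that sit under a different hand than expected."""
    hand_of = {d: hand for hand, digits in expected.items() for d in digits}
    return [
        d
        for hand, digits in actual.items()
        for d in digits
        if hand_of[d] != hand
    ]


# ASSUMPTION: the physical keys on the right half of the Sculpt's top row.
# Backspace sits immediately right of plus/equals, per the post.
SCULPT_RIGHT_TOP_ROW = ["7", "8", "9", "0", "-", "=", "backspace"]

# What the right half would need to hold if 6 moved back to the right hand.
WANTED_RIGHT = ["6", "7", "8", "9", "0", "-", "=", "backspace"]


def remap_fits(physical_keys, wanted_legends):
    """True if every wanted legend can get its own physical key."""
    return len(wanted_legends) <= len(physical_keys)


print(misplaced_digits(KINESIS, SCULPT))
print(remap_fits(SCULPT_RIGHT_TOP_ROW, WANTED_RIGHT))
```

Only one digit is under the wrong hand, but moving it back would push everything after it one key to the right, and under the assumed layout there's no spare key to absorb that.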

The tl;dr here is that yeah, once again, I’ve run into a place where Microsoft subverts an agreed-upon standard for no good goddamn reason. What IS it with those guys?

No, YOU’RE a two-rotor wankel

Even people who aren’t gearheads tend to be at least provisionally aware that the Mazda RX-7 and RX-8 used a very different sort of engine than pretty much every other car. While the rest of the auto world had long since settled on a piston-and-cylinder design, the RX cars used something else: the Rotary or Wankel engine.

I knew this, too, and even had a vague idea how it actually worked — just a lot MORE vague than my understanding of conventional cylinder engines. However, if you’re curious, this excellent video runs down exactly how rotaries work by walking you through the actual parts involved (in this case, from a 1985 RX-7). It’s pretty awesome.

You may have also noticed that, well, not only were the Mazda RX cars the only cars to use these things, but not even Mazda makes them anymore. Why is that? Well, fortunately, the same guy also made a video about why the rotary engine is dead. Again, I knew some of this, but not the underlying causes for them. Great, very accessible work here.

The tl;dr here, though, is kind of simple: because of the way the engine works, there’s an irregularly shaped combustion chamber, and that leads to inefficient combustion. A rotary is absolutely going to end up emitting unburnt fuel, which is terrible for fuel economy and terrible for emissions. The problem is exacerbated by the fact that combustion only happens on one side of the chamber, which leads to enormous temperature differentials, which leads to problems in sealing, which means inefficient combustion, which means bad emissions and bad fuel economy again.

But watch the videos. He explains it really well.

Luke has a valid point.

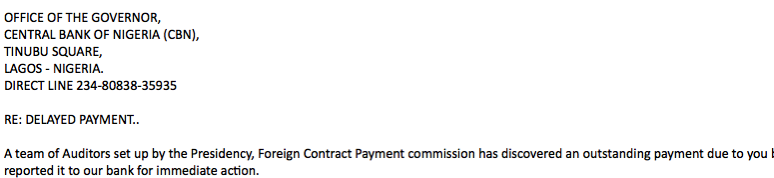

Wow! This is almost cute.

I just got an honest-to-goodness “Nigerian prince” spam:

And now, a pretty good lay description of Apple v. FBI

Also, its title is “Mr Fart’s Favorite Colors”, and how can you not read that?

More on FBI vs. Apple

Bruce Schneier has a nice rundown of recent events.

It’s becoming increasingly clear that the FBI has seriously overstepped here, even to lay people.

Here’s something interesting…

Well, to me, anyway.

You’ve all played pool on a coin-op table in a bar, obviously. Did you ever wonder how the table knows which ball is the cue ball? It has to be able to tell somehow, because after every scratch during play it returns the cue instead of trapping it with the object balls.

Turns out, there’s two ways.

One method involves a big-ass magnet and requires the cue contain enough iron for the magnet to pull it to one side and change its pathway inside the table. That’s pretty neat. (Click through; there’s video.)

The other method involves a cue ball that is fractionally bigger (2 and 3/8 inches vs the standard 2 and 1/4) than the object balls, and uses a slightly different mechanism to do the segregation that’s perhaps a bit easier to imagine since it’s size based.

This information has implications, though.

First, it means that home or premium table cue balls won’t work on coin-op tables, because in those sets (a) all the balls are the same size and (b) none of them contain iron.

Second, a bar owner who needs a new cue ball for his table will also need to know which kind of mechanism his table has, because there are two types of cue balls and the iron one won’t work on a table that expects an off-size cue, and vice versa.
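If you think in code, the compatibility matrix looks something like this rough Python sketch. The diameters come from the description above; everything else is a simplification of mine: the names are made up, I'm assuming the magnetic cue ball is the standard size, and a real table does this with ramps and magnets rather than `if` statements.

```python
from dataclasses import dataclass

OBJECT_BALL_DIAMETER = 2.25    # inches, the standard size
OVERSIZE_CUE_DIAMETER = 2.375  # inches, the off-size coin-op cue ball


@dataclass
class Ball:
    diameter: float
    contains_iron: bool = False


def returned_to_player(ball, mechanism):
    """True if a table with this mechanism would route the ball back out."""
    if mechanism == "magnet":
        # The magnet pulls an iron-bearing ball onto the return path.
        return ball.contains_iron
    if mechanism == "size":
        # Anything bigger than an object ball gets sorted onto the return path.
        return ball.diameter > OBJECT_BALL_DIAMETER
    raise ValueError(f"unknown mechanism: {mechanism}")


# ASSUMPTION: the magnetic cue ball is the same size as the object balls.
magnetic_cue = Ball(OBJECT_BALL_DIAMETER, contains_iron=True)
oversize_cue = Ball(OVERSIZE_CUE_DIAMETER)
home_cue = Ball(OBJECT_BALL_DIAMETER)

for name, ball in [("magnetic", magnetic_cue),
                   ("oversize", oversize_cue),
                   ("home", home_cue)]:
    for mechanism in ("magnet", "size"):
        print(name, mechanism, returned_to_player(ball, mechanism))
```

Each coin-op cue ball only comes back on the table type it was built for, and the home cue ball gets swallowed by both.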

Interestingly, the first several billiard supply shops I found online didn’t even deal with coin-op cue balls; they were all about the home or premium market. I assume this is because a bar owner (or similar) is dealing with some local amusement vendor and not the Internet most of the time, whereas folks searching for “pool table supplies” online are looking to outfit their own billiard room.

No idea why this information charms me so much, but there it is. (Also: Magnets!)

Yet Another Bizarre Aspect of Win 10

In addition to all sorts of “phone home” behavior that is, apparently, not something you can disable, it turns out that Windows 10 will delete apps it thinks are outdated without asking first.

What. The. Actual. Fuck.