Someone has made a regexp crossword puzzle.

Category Archives: Geek

Dept. of Technological Anniversaries

Thirty years ago, on January 22, 1984, computing changed forever. The ad was a teaser; remember, it ran only once, but during the Super Bowl (Raiders 38, Redskins 9), so it’s safe to say lots of people saw it.

Jobs’ demonstration of the actual machine two days later made it clear that Apple was playing the game at a higher level than anybody else. Remember, at the time, the IBM PC was state of the art for personal computing: huge, bulky, unfriendly, and based on a command line interface. There was no sound beyond beeps and boops. Graphics were a joke on the PC, and required an add-on card. The GUI Jobs demonstrates here is, by comparison, from another planet. The technical information he outlines is similarly cutting edge, especially for a mass-market computer. To say this was an exciting development is to understate things by a couple orders of magnitude. The Mac changed personal computing in enormous and profound ways. Jobs’ examples of IBM missing the boat may seem grandiose, but he’s fundamentally right.

(Something else to keep in mind: in this video, Steve Jobs is twenty-nine years old.)

I didn’t join the Mac faithful right away — in ’84, I was in junior high. I made it through high school with a TRS-80, a cartridge-based word processor, and a cassette tape drive as my mass storage. (Bonus: without the cartridge in, the CoCo booted straight to BASIC.)

I went to college in 1988, but since my campus was more PC than Mac, I bought an AT clone that turned out to be the fastest machine in my whole dorm. That was kind of fun. It also turned out that computers made sense to me in ways that other people didn’t get, and so I stayed in the Windows world for a long time (but for some very rewarding side trips), largely because people were paying me to do so.

But I got there eventually, mostly because of how awful Windows became, especially on a laptop. In late 1999, I was traveling a lot, living out of a laptop, and writing lots of Office docs. Windows 98 on a laptop was a dumpster fire in terms of reliability: crashes were frequent, and the idea of putting your laptop to sleep was just a joke. Windows couldn’t handle it, so you were forever shutting down and rebooting. Then a friend of mine showed me his new G3 PowerBook. In the days before OS X, Macs were only a little less crashy than Windows, but it was enough to catch my eye. The functional sleep/wake cycle, a big beautiful screen, and a generally more sane operating environment closed the deal, and I made the switch in early 2000 to a 500MHz G3 PowerBook.

What’s interesting now to me in retrospect is that I realized I’ve been on the Mac side for nearly half its life. I’ve used Macs way longer than I used PCs (1988 to 1999). I see no future in which I switch back. Had Apple not switched to a Unix-based OS, I’d probably have gone to Linux for professional reasons — and, honestly, desktop Linux would probably be a much better place. (Having a commercially supported Unix with professional-grade software written for it, running on premium hardware meant fewer people worked to make Linux on the desktop viable for normal humans.) Instead, Apple built OSX, and changed everything again.

Original Macs were sometimes derided by so-called “serious” computing people as good for design and graphics and whatnot, but not for “real” work; by shifting to the BSD-based OSX, Apple gave the Mac the kind of hardcore underpinnings that Windows could only dream of (and, really, still doesn’t have). The designers and creatives stayed, and a whole extra swath of web-native software people joined them as the Mac (and especially the Mac laptop) became the machine of choice for an entire generation of developers. That shift has been permanent; if you’re writing web code in Python or Ruby or PHP, you’re far more likely to be doing so on a Mac than on Windows simply because the Mac has so much more in common with your production servers than Windows does.

The end result is that the Mac platform is in better shape today, at 30 years old, than it’s ever been.

I tallied it up the other day. I’ve had five Macs as my personal machine, counting the G3 I bought back in ’99. I’ve bought two others for my household: a 2009 Mini that serves as my media server, and a 2012 11″ MacBook Air I bought Mrs Heathen last Christmas. Somewhat hilariously, in doing this tally, I realized that (a) I never owned an “iconic” square Mac like the one in the video above; and (b) four of my five Mac laptops have looked almost exactly the same: the 2003 Titanium PowerBook G4 (1GHz, 512MB of RAM, and a 60GB hard drive; a very high end configuration at the time!) was one of the first of the “sleek silver metal” Mac laptops, and that style was carried over to the upgrade I bought in 2005, though by then they were made of aluminum. In 2007, I made the jump to the Intel-based MacBook Pros; the bump in power was pretty huge, but the chassis was substantially the same.

My 2010 update didn’t look much different, and the only significant visual difference between the 2010 model and the one I bought last fall is that my new one doesn’t have an optical drive and is therefore slimmer.

Eleven years is a long time for a product to look pretty much the same, especially in computing, but I’ve yet to see anyone complain that the MacBook Pro looks dated. That’s what paying attention to design gets you. I suspect that, eventually, the Pro will get a more Air-like profile, but right now the power consumption and temperature issues mandate the more traditional shape.

Anyway, Apple has a minisite up about the anniversary. It’s fun. Visit.

Say what you want about the guy, but he was always looking ahead

Mac blog Daring Fireball points us to this story from a decade ago, about the Mac’s 20th anniversary.

Herein, Jobs says

Like, when you make a movie, you burn a DVD and you take it to your DVD player. Someday that could happen over AirPort, so you don’t have to burn a DVD — you can just watch it right off your computer on your television set.

Someday, maybe, sure.

How to tell if a vendor holds both you and their own employees in contempt

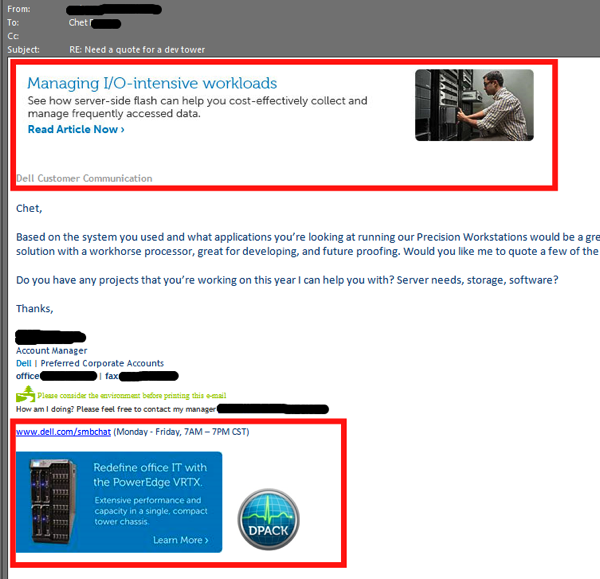

The following is a screenshot of a recent communication I had with Dell after I requested information about some new employee machines:

The areas in the red boxes are random advertisements inserted into every message this guy sends me. He can’t turn it off. I get different ones on every message.

That’s completely fucking bananas. What idiot marketing droid came up with this shit? Sweet Jesus, man, how did that ever survive the light of day? Think about it: every message sent by our actual REP includes spam.

Marketing people, man. I just can’t get past the fact that someone in Austin thought this was a good idea.

You can tell it’s bullshit because Forrester is involved

The New Deal is the latest mumbo-jumbo middle-manager fantasy about software.

Let me save you some time, and paraphrase what it says:

We have no idea how software gets written, but we have the vague sense that software is hard, and we know lots of buzzwords.

(h/t: Rob.)

SANTARAN CAROLS.

Commander Strax has an excellent Christmas message.

Microgravity Hangover

Turns out, if you spend enough time on ISS, you might need to unlearn some habits once you come home.

Today in Linguistic Tomfoolery

If Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo amuses you, well, here’s something in German.

(It’s only this trip through the Buffalo… page that I notice the assertion that any number of repetitions of the word “buffalo,” terminated with a period, is a grammatically valid English sentence.)

Dept. of Disappointing Milestones

As of today, it has been 41 years since a human walked on something other than the earth.

That’s ridiculous.

This is how I’m killing fire ants from now on.

First, though, I’m gonna need a really fucking hot gas burner.

“Waka waka bang splat…”

This came up in conversation lately; I was pleased to see it’s still floating around. I’m pretty sure the first time I saw it was via USENET on a mainframe in the late 1980s.

Deep Nerdery: Whatever happened to OS/2?

Ars Technica has a great history of IBM’s now-mostly-forgotten Windows competitor. Definitely worth a read if you’re a computing greybeard like me.

And now, painful satire for nerdy heathen

Introduction to Abject-Oriented Programming.

Sample:

Inheritance

Inheritance is a way to retain features of old code in newer code. The programmer derives from an existing function or block of code by making a copy of the code, then making changes to the copy. The derived code is often specialized by adding features not implemented in the original. In this way the old code is retained but the new code inherits from it.

Programs that use inheritance are characterized by similar blocks of code with small differences appearing throughout the source. Another sign of inheritance is static members: variables and code that are not directly referenced or used, but serve to maintain a link to the original base or parent code.

Today in Minor Improvements

My super-goofy yet awesome keyboard has returned from its hospitalization rejuvenated and shockingly clean. It’s possible that they were able to build their own Wiggins out of the hair I’m sure they found inside it.

This caps a series of home/office logistical tasks I feel inordinately happy about sorting out, including:

- Something called “mudjacking”;

- Getting the retarded tablet fixed;

- Repairing the front door lock;

- Returning a client laptop to the client 8 months late;

- Acquiring a haircut;

- Getting an annual eye exam;

- Having AT&T hook up the goddamn cable; and

- Having AT&T come back out and fix the broken cable box 2 days after installation.

This is Goddamn Hilarious

Syncing your watch in 19th Century London

Obviously, your go-to source for the accurate time was, and remains, Big Ben — even if you can’t see it, you can HEAR it, right?

But what if you wanted to be as accurate as possible? Obviously, if you’re far from Ben, you’d hear the chimes later than someone quite close to it — with the speed of sound being about 1,126 feet per second, it matters.

Fortunately, there’s a map you can consult, with concentric rings showing the delay from “true” time.
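For the curious, the arithmetic behind those rings is just distance divided by the speed of sound. Here's a rough sketch using the 1,126 feet per second figure from above; the function name and the sample distances are mine, picked for illustration, and it ignores wind, temperature, and everything else a real acoustician would care about.

```python
# Speed of sound quoted in the post, in feet per second.
SPEED_OF_SOUND_FT_PER_S = 1126.0
FEET_PER_MILE = 5280


def chime_delay_seconds(distance_feet: float) -> float:
    """Seconds between the bell being struck and a listener hearing it."""
    return distance_feet / SPEED_OF_SOUND_FT_PER_S


if __name__ == "__main__":
    # Sample distances are illustrative, not taken from the map.
    for miles in (0.5, 1, 2, 3):
        delay = chime_delay_seconds(miles * FEET_PER_MILE)
        print(f"{miles} mile(s) from the bell: about {delay:.1f} s late")
```

So a listener a mile away is a bit under five seconds behind "true" time, which is plenty to matter if you're being fussy about your watch.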

Neat.

My new favorite insane thing on the Interwubs

At Hot Pepper Game Reviews, games are reviewed by presenters only after they’ve eaten a very, very hot pepper.

For example, here’s the review of the new Kingdom Hearts game after eating a habanero. The habanero is also the co-star of the Gunpoint review.

iPhones and AAPL prices

Apple is, at this point, sort of like Alabama. They’ve been so good for so long that the press in both cases just can’t wait for some imagined comeuppance, and so the new pattern we see after every Apple event is a litany of folks explaining how much the company has lost its way post-Jobs, and how it’s obviously drifting and leaderless, and how they’re completely over. Indeed, after the event yesterday, Apple shares fell 5%, and they remain significantly below their 52-week high of $705.

Well, if this is what “over” looks like, I’ll damn sure take it. Apple remains one of the most profitable companies in the world — in fact #2, behind Exxon Mobil, and the dollar gap is less than 10% despite the fact that Exxon has 2.5 times the revenue of Apple. They continue to sell just about as many phones, tablets, and laptops as they can make. As a consequence of being a money-printing machine, they’re also sitting on a cash mountain of about $147 billion.

And yet, as I write this, a single share of AAPL sells for about $470, which values the company at only about $427 billion. That’s still enough to make it the most valuable public company in the world (ahead of Exxon, Google, GE, etc.; its old rival from Redmond is waaaay down the list), but it strikes me as low.

Why? At this price, the firm’s P/E ratio is 11.7. That’s a rate that implies an over-the-hill firm in a mature market (e.g., it’s not far from Exxon’s P/E, or Microsoft’s). And note further that this ratio includes in the value of the firm all that cash, which (when factored in) would depress the P/E even further (to less than 8, if my math is right). That’s absurd in a world where Apple prints money at this rate. Google’s astoundingly less profitable, and its P/E is 26. Even at Apple’s lofty $700+ price per share last year, its P/E didn’t suggest it was too expensive.
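Since I hedged on the math, here's a quick check of the ex-cash figure. It's a back-of-the-envelope sketch, not analysis: it uses only the numbers quoted in this post (about $427 billion market cap, about $147 billion cash, P/E of 11.7), backs the earnings out of the P/E, and the function name is my own invention.

```python
def pe_excluding_cash(market_cap: float, cash: float, pe_ratio: float) -> float:
    """P/E recomputed after subtracting the cash pile from the market value.

    Earnings are implied by the quoted P/E: earnings = market_cap / pe_ratio.
    """
    implied_earnings = market_cap / pe_ratio
    return (market_cap - cash) / implied_earnings


if __name__ == "__main__":
    # Figures in billions of dollars, as quoted in the post.
    adjusted = pe_excluding_cash(market_cap=427.0, cash=147.0, pe_ratio=11.7)
    print(f"P/E with the cash backed out: {adjusted:.2f}")
```

That comes out a little under 8, so the math holds up.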

I’m certainly no investment advisor. Make no mistake. It sure seems to me, though, that nitwits claiming Apple is over are riding backlash and not meaningful analysis.

Oh, damn: more Surface tension

Software Nerds Only

A quine is a program that prints its own source code. This sounds superficially easy, but it’s actually really not.
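To make that concrete, here's one well-known two-line Python quine, wrapped in a small harness of my own (the `run_and_capture` helper and the `QUINE_SOURCE` name are just for this illustration) that runs it and checks the output against the source. The trick is that the string holds a template of the whole program, and `%r` drops the string's own quoted form back into itself.

```python
import contextlib
import io

# The quine itself, stored as text so it can be run and compared.
# Written out as a file, it is exactly these two lines:
#   s = 's = %r\nprint(s %% s)'
#   print(s % s)
QUINE_SOURCE = "s = 's = %r\\nprint(s %% s)'\nprint(s % s)\n"


def run_and_capture(source: str) -> str:
    """Execute `source` in a fresh namespace and return what it printed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


if __name__ == "__main__":
    output = run_and_capture(QUINE_SOURCE)
    print(output, end="")
    print("matches its own source:", output == QUINE_SOURCE)
```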

A quine relay is something else again, and now my brane hurts.

Dept. of Excellent Science & Video

Youtuber Smarter Every Day has a really, really fantastic piece up wherein he uses a super-high-speed camera to capture the act of firing a rifle underwater. The resulting discussion of the strange bubble pattern is really fascinating and accessible; check it out.

What happened with the Surface?

This analysis of the Surface debacle over at Microsoft is pretty astute. (Via Bill Shirley on Twitter.)

A Brief Summary of the Last Several Minutes

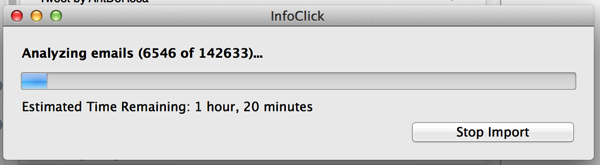

“Hey, I need to figure out how many times I’ve done [thing I do with email], so I need to gin up a fancy search.”

(Fiddles with native search tools. Curses.)

“Hey, didn’t I see something about a cool search utility for Apple Mail somewhere?”

(Checks local notes.)

“Yup, sure did! And there’s a free trial! Man, this is gonna be easy!”

(Downloads. Installs. Runs.)

“Oh. Right.”

(The sad thing is that this doesn’t represent even most of my mail; I don’t have anything like complete archives for stuff pre-1999/2000, which for me means 12-13 years are missing. On the other hand, the index will happen faster as a result.)

Effin’ Magnet Chains: How do they work?

This is completely awesome, and I obviously must have 50 meters of magnetized ball chain.

Update: I have been alerted that the chain was NOT magnetized; this’ll work with 50m of normal bead chain, apparently, which makes it even MORE awesome.

Today in interesting, short, nontraditional SF

This is a short SF story presented as a bug report to Twitter, regarding the failure of their API to limit result sets to tweets from the past.

You should go read it.

Today in HIGH Geekery, Microsoft tomfoolery division

In MSFT operating systems, it’s basically impossible to block Microsoft’s domains because they get treated specially and do not use the normal DNS system.

Whisky. Tango. Foxtrot.

You know what’s awesome about Outlook?

That every few months, it decides my .OST file is fucked, and that I need to delete it and re-download all my data.

All eight fucking gig.

Things that couldn’t possibly be a bad idea

Way back in 1994, when I moved to Houston and took a job at TeleCheck, I was absolutely shocked to discover that, in their machine room, there was still an honest-to-shit PDP-11 running RSX, alone in a room full of Vaxes and Alphas.

“Good lord! Why?” I asked.

Well, it was complicated. TeleCheck used to be a loose confederation of state-by-state franchise operations, before one guy had bought up most of them (and, eventually, all of them). When they were still mostly franchises, though, a guy had had the idea to create a stand-alone computing services company to do the IT and programming for the franchises, plus some other clients as needed. He called the firm RealShare. (IT at TeleCheck was still known as RealShare well into the 1990s.)

By the early 1990s, nearly all the franchises (all except Australia, New Zealand, and two US states) plus RealShare were under one very leveraged roof held by a handful of execs (all of whom became hugely rich when FFMC bought TeleCheck in like ’92 or ’93, but never mind that). By that point, RealShare’s outside client list had dwindled to ONE: the vaguely-named MultiService Corporation of Kansas City. And MSC’s services ran on the PDP-11, and MSC wouldn’t pay to upgrade, so there they sat.

At the time, 19 years ago, it seemed obvious to me that this was a terrible idea, and that while they COULD stay there for years, they’d be left behind by the broader industry. In technology, holding on too long to older tech can become very, very expensive! Besides, by that point even the technologically conservative TeleCheck had moved on to Vaxes, and in fact was slowly migrating to the wave of the future that was Alpha. (Yeah, about that…)

I left TeleCheck in 1997, off to greener pastures. I assume that lone PDP has long since been powered down. After all, that was almost two decades ago.

Imagine my surprise, then, to discover today that there ARE PDP-11s still in use, and that the organization using them intends to keep them on line until 2050, and has taken to trolling through vintage computing forums to find talent to keep them running!

“Wow! That’s amazing, Chief Heathen! I assume, at least, that they’re not being used for anything IMPORTANT, like financial processing, right?”

Well, you’d be wrong. True, it’s not financial processing they’re doing. It’s nuclear plant automation, by GE Canada.

An obsolete system from a defunct company with effectively no user base and fewer knowledgeable developers and administrators? What could possibly go wrong?

Dept of Old News

There’s a pretty good video floating around concerning what would happen if Superman punched you at full force, but it turns out it’s basically exactly the same riff that Randall Munroe did in his very first What If a few months ago when he answered the question “What would happen if you tried to hit a baseball pitched at 90% of the speed of light?”

Answer in both cases: rough equivalent of a GIANT-ASS NUCLEAR BOMB. But given Munroe’s profile at this point, it seems kinda unlikely that the Superman-fist guy hadn’t seen Munroe’s work, which makes his effort seem cheesy.

I am entirely unclear as to why my desk does not have a Spock button.

In which we grouse about RSS readers

So Google Reader is going away in 20 days, which is troubling. I’ve been a big fan of it for a long time, but — like Gmail — I only use it as a back-end service. Just as I never log into Gmail to read mail, I never log into Reader to read sites. I use nice, native clients way cooler, nicer, and more fully featured than a web app. GR just provides the back-end sync.

For most of my life with GR, I used the Reeder app on my Mac, my phone, and my iPad. It’s really, really great. A while back, though, the Mac version developed a problem where it wasn’t able to sync with GR anymore. No idea why, or if I was the only one with the problem, and I got no support from the author, so I fell back to using the venerable NetNewsWire on the Mac (which can sync with GR) and kept using Reeder on my iOS devices. I do most of my reading on the iPad anyway.

Except now I have to change, and change nearly always sucks. Especially in this case, as it turns out that my use case is that of a power-user, and nobody wants to take my money.

Following the glowing coverage, I looked first at NewsBlur, and was about ready to make the jump until I discovered something troubling: Apparently, NewsBlur quietly and automatically marks any item more than two weeks old as read, and there’s no way to change that. Hope you weren’t saving that! That’s a serious dealbreaker (I leave items unread all the time as ticklers for later action), but at least I discovered it before I signed up for an annual subscription. NewsBlur is also wasting time and money (from my point of view) building out a sharing-and-discovery featureset I find utterly uninteresting. I’m already on Facebook and Twitter, and I post here. I don’t need to have a dialog with other users in my feedreader, and I don’t need to “train” my reader to find sites for me. Just work the list I give you, and be done with it. I have American money. I’ll pay you.

Then I looked at Feedly, which is one of those high-concept things. The first troubling aspect is that it’s apparently free, and I’ve been burned on that before (and in fact I’m being burned by that RIGHT NOW). Secondly, the app is just a disaster of overdesign. Where Reeder is quiet, minimalist, and fast, Feedly is cumbersome and too pleased with itself by half — really, I just want the text. I don’t need you to reformat the stories into a facsimile of a magazine, for Christ’s sake. Feedly also appears to be just a browser, not a reader that grabs your subscription updates and presents them to you locally. This matters, because sometimes, I don’t have a network connection. Also troubling: Feedly is built to use Google Reader, and while they’re working quickly they still haven’t launched their in-house sync back-end. The end of the month could be a very messy time for them. No thanks.

Finally, I looked at Feedbin, which is probably the most promising option since it’s the one the Reeder author is working towards, and if he gets done I’ll be back with the right apps again. However, at present there’s no acceptable way for me to USE it — the Reeder author has only completed the Feedbin port for the iPhone version, which is my least-used client. Feedbin itself has a web interface, but it’s pretty crappy. The only iPad client is something called “Slow Feeds” that insists on sorting your subscriptions by update frequency, not by subject, which seems utterly useless. (The stated point of SF is to keep the rarely-updated feeds from being lost in your subscription list. This is a problem I never, ever have with Reeder, because its default mode is to show you ONLY feeds with new stories. This seems like a much better way to solve the problem.)

As of now, I’m assuming that Reeder for Mac and iPad won’t be ready in three weeks, and that I’ll be back to running NetNewsWire on my Mac (which can’t sync with anything but GR, but is still a workable stand-alone reader) and not reading news at all on my phone or iPad, at least until Reeder finishes with the ports.

Just like 2004. Yay! Giant steps backwards!

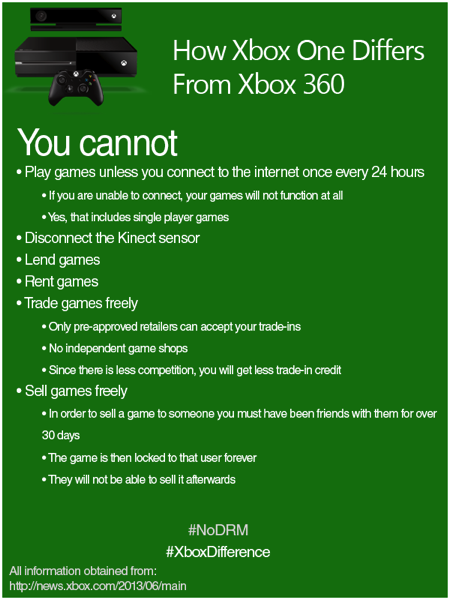

What You Need To Know About the Xbox One

From Twitter via Imgur via, probably, Reddit; no credit is obvious:

It must be connected to the Internet every 24 hours, or you can’t play at all. You cannot disconnect the Kinect sensor. You cannot lend, rent, sell, or trade games easily, because fuck you. It’s basically designed to destroy the used game market entirely.

How about no? Is no good for you, Microsoft?

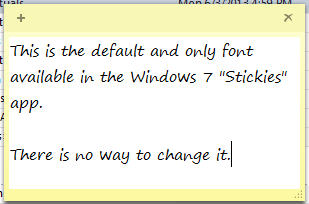

Exhibit Eleventy Billion in People v. Microsoft

Ahem:

“But Chief Heathen,” you must be wondering, “surely there’s a preference screen or something where you can change it to something sensible!”

You’d be wrong. Check it out. While it will, apparently, respect the formatting of text pasted into a sticky, the default is hardcoded and cannot be changed short of really goofy hacks.

WAT.

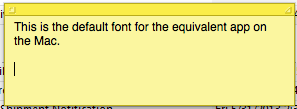

By contrast:

Moreover, you can create a new default at any time by formatting a note’s color, font, etc., any old way you like, and then choosing “Note -> Use as Default” from the menu bar.

BURNINATING THE COUNTRYSIDE. BURNINATING WESTEROS.

(From this Tumblr.)

Every programmer’s conversation with finance people, ever

Programmer: So we’ll see only record types A, B, and C, right?

Finance: Yes. That’s all.

Programmer: Never D? We have some D here.

Finance: Actually, yes. You need to do $special_thing with D records.

Programmer: Okay, so A, B, C, and D after all. That’s it?

Finance: That’s all. We promise.

(Weeks later)

Finance: Where are my E records?

It’s distressing to me the degree to which rigorous logical thinking is completely alien to corporate finance people. I am reminded of the wise words of my friend R., who said “Normal people don’t see exceptions to rules as a big deal, so they forget to mention them. This is why programmers drink so much.”

Best Video You’ll See Today

So, what happens when you wring out a washcloth in zero g?

As with many space-related things, it is completely awesome.

Now we need one for House Heathen

Dear Microsoft Project

You know, it’s nice and all that multistep logic is possible in a custom field calculation, but don’t you think it might be NICER to make it possible to debug something like this

IIf([Imported Actual Finish]<>projdatevalue("NA"),100,(IIf([Imported Actual Start]=projdatevalue("NA"),0,(IIf([Imported Expected Finish]=projdatevalue("NA"),0,(IIf([Imported Actual Start]>=[Imported Expected Finish],0,(IIf([Imported Remaining Duration]<0,0,((ProjDateDiff([Imported Actual Start],[Imported Expected Finish])*2/3)-[Imported Remaining Duration]*60*8)/((ProjDateDiff([Imported Actual Start],[Imported Expected Finish])*2/3))*100)))))))))

without resorting to external editors? I mean, just some basic paren matching would save an awful goddamn lot of time…
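For what it's worth, the "basic paren matching" I'm asking for is not exactly rocket surgery. Here's a minimal sketch of the sort of external check you end up writing instead. It is a plain stack-based bracket matcher, not anything Project provides; the function name and the short sample formulas are made up for illustration, and it assumes double-quoted strings never contain escaped quotes.

```python
def find_unbalanced(formula: str) -> list[tuple[int, str]]:
    """Return (index, character) for every unmatched ( ) [ ] in `formula`.

    Brackets inside double-quoted strings are ignored.
    """
    closers = {")": "(", "]": "["}
    open_stack = []   # (index, char) of brackets still waiting for a close
    problems = []     # stray closing brackets
    in_string = False
    for index, char in enumerate(formula):
        if char == '"':
            in_string = not in_string
        elif in_string:
            continue
        elif char in "([":
            open_stack.append((index, char))
        elif char in closers:
            if open_stack and open_stack[-1][1] == closers[char]:
                open_stack.pop()
            else:
                problems.append((index, char))
    # Anything left on the stack was opened but never closed.
    return sorted(problems + open_stack)


if __name__ == "__main__":
    # Hypothetical, much shorter formulas than the monster above.
    print(find_unbalanced('IIf([A]<0,0,([B]*2/3))'))
    print(find_unbalanced('IIf([A]<0,0,([B]*2/3)'))
```

An empty list means everything pairs up; otherwise you get the position of each offender, which is more than the custom field dialog will tell you.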

Mmmm, gears

If you connect enough 1:50 gears, you can run the first one at 200RPM and still encase the final one in concrete, as it will be trillions of years before it rotates even once.
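The numbers are easy enough to check. The sketch below assumes twelve 50:1 reduction stages, which is my assumption and not something stated here; the 200RPM input is from above, and the function name is just for illustration.

```python
MINUTES_PER_YEAR = 60 * 24 * 365.25


def years_per_final_rotation(stages: int, ratio: int = 50,
                             input_rpm: float = 200.0) -> float:
    """Years for the last gear to turn once, given a chain of reductions.

    Each stage divides the rotation speed by `ratio`, so the input shaft
    must turn ratio ** stages times for one turn of the final gear.
    """
    input_turns_needed = ratio ** stages
    minutes = input_turns_needed / input_rpm
    return minutes / MINUTES_PER_YEAR


if __name__ == "__main__":
    # The stage count of 12 is an assumption for illustration.
    for stages in (4, 8, 12):
        years = years_per_final_rotation(stages)
        print(f"{stages} stages: {years:.3g} years per rotation")
```

With twelve stages that works out to a couple of trillion years per rotation, so the concrete is in no danger.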

SimCity: A study in EA hating you

Gamer-Heathen may have heard by now of the serious clusterfuck that is the new SimCity release. EA has decided that an always-on Internet connection is required to play this single player game that has no natural reason to connect to anything, and their servers got swamped on launch day. The predictable result was lots of folks with an $80 game who could not play.

At least one reviewer has pointed out the absurdity of this.

I, on the other hand, proceeded to Good Old Games and paid $5.99 for the last good version of SimCity, SimCity 2000. Go and do likewise.

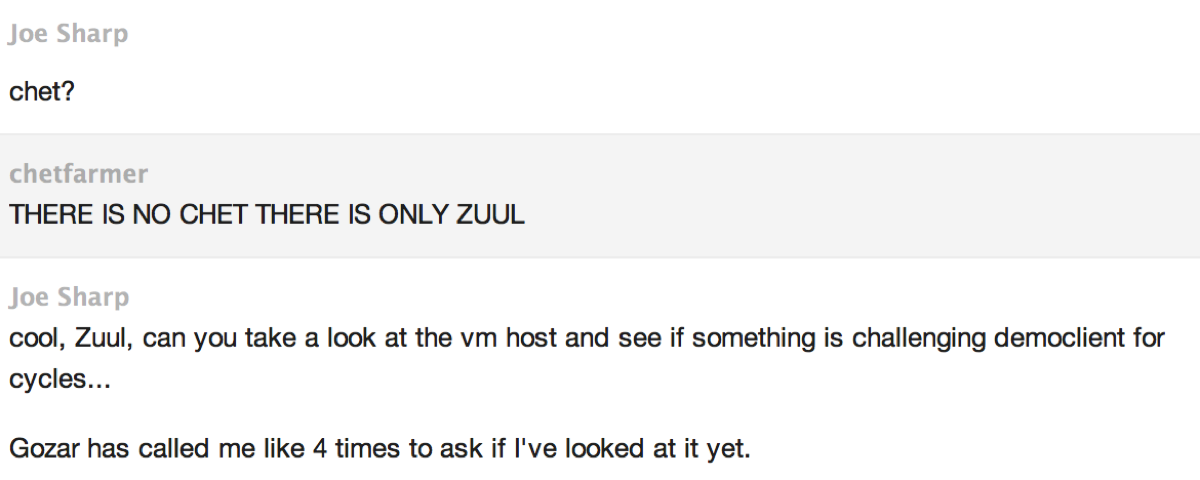

Life in IM

What could possibly go wrong?

They’ve taught a giant four-legged robot called Big Dog to throw cinderblocks.

Today in Awesome Obsessiveness

This man has invented a machine to separate Oreos and remove the cream.

Because they hate you, that’s why

In years past, when you bought a piece of software, you owned a perpetual license. If your computer died, you could install it on your new computer without a hitch, because of this license.

Microsoft has decided not to do it that way anymore; new versions of Office will now be bound to the machine they’re installed on, so that when you move to a new computer you are expected to buy another copy.

Fuck. That.

Go do this right now

From Twitter:

Even if you’re not a Unix junkie, open a Terminal and type this: traceroute 216.81.59.173 DNS tells a story… /via @gravax @AlecMuffett

Right now. Immediately. (On Windows, use the CMD prompt and “tracert” instead, I believe.)

This is. . . unnerving

Motion capture has long been a part of gaming and special effects, but the next-generation process used in the game LA Noire didn’t require weird reflective suits; instead, the cameras just captured the actors. This resulted in shockingly real motion in the game, obviously, but a by-product is an actual outtakes reel that is both fascinating and a little disturbing.

Bonus: Mad Men’s “Ken Cosgrove” is the lead character.

My kingdom for a better Twitter client!

Right, so, Twitter is fun. I like it. I enjoy the long-term async chat it gives me with my friends near and far, and I enjoy the amusement gained from following assorted famous people.

But.

Some of these famous people (whom I enjoy!) have a tendency to go a little bananas on the RT front, either with “real” retweets or quote-style retweets. Twitter itself will allow you to opt out of receiving a given user’s RTs at all, but this only catches the first kind; if someone likes to RT posts with commentary attached, it’s not a RT by Twitter’s definition and it gets through.

The other aspect of the “disable RT” feature that makes it unsatisfactory is that it’s all or nothing, when in fact I like getting the occasional RT of some clever bit shared by someone, or the signal boost of a good cause that someone like Wheaton or Gaiman can provide.

My dream Twitter client is one that allows me to set a threshold for each person I follow (or overall; I won’t get greedy) such that I only see X tweets over a Y-minute period, resetting only after the user has been quiet for Z minutes. That way, I’d get the one-off RTs and signal boosts, but I’d be spared the I MUST SHARE EVERYTHING explosions that some people (God love ’em) have been prone to.
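To show it's not a crazy ask, here's a rough sketch of that X/Y/Z rule. Everything in it is hypothetical: the `BurstFilter` class is my own invention, it touches no real Twitter API, and it reflects one reading of the rule, namely that once someone blows past X tweets in Y minutes, everything from them is hidden until they've been silent for Z minutes. You'd keep one of these per followed account.

```python
from collections import deque


class BurstFilter:
    """Show at most `max_tweets` per window; after that, hide until quiet."""

    def __init__(self, max_tweets: int, window_minutes: float,
                 quiet_minutes: float) -> None:
        self.max_tweets = max_tweets
        self.window_seconds = window_minutes * 60
        self.quiet_seconds = quiet_minutes * 60
        self.shown = deque()      # timestamps of tweets we let through
        self.last_seen = None     # timestamp of the most recent tweet, shown or not
        self.muted = False

    def allow(self, timestamp: float) -> bool:
        """Return True if a tweet arriving at `timestamp` (seconds) is shown."""
        # A long enough silence resets everything.
        if (self.last_seen is not None
                and timestamp - self.last_seen >= self.quiet_seconds):
            self.muted = False
            self.shown.clear()
        self.last_seen = timestamp
        if self.muted:
            return False
        # Forget shown tweets that have aged out of the window.
        while self.shown and timestamp - self.shown[0] >= self.window_seconds:
            self.shown.popleft()
        if len(self.shown) >= self.max_tweets:
            self.muted = True
            return False
        self.shown.append(timestamp)
        return True


if __name__ == "__main__":
    # Illustrative settings: 2 tweets per 10 minutes, reset after 30 quiet minutes.
    burst = BurstFilter(max_tweets=2, window_minutes=10, quiet_minutes=30)
    for seconds in (0, 60, 120, 180, 1980):
        print(seconds, burst.allow(seconds))
```

The one-off RT gets through, the explosion gets cut off after the first couple, and the account earns its way back by shutting up for a while.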

Granted, no one is going to write this, let alone now. Something I’d settle for, though, would be the ability to apply new mutes, unfollows, or RT-blocks to the list of tweets already downloaded. That alone would salvage the experience once someone goes RT-happy.

Dept. of things that COULD NOT BE MORE AWESOME

It’s about time to check in on the Slingshot Channel again

This time, Joerg Sprave has made a slingshot capable of firing chainsaws.

As it turns out, simplicity is HARD

Inspired by the Up Goer Five XKCD cartoon, Ten Hundred Words of Science invites you to review similarly constrained job descriptions, or even contribute your own. It’s harder than it looks.

My own attempt:

On very big jobs like power places or space cars or flying cars, people have a hard time telling how much is done, or how much money it is going to take, before they are finished. They might spend too much money, or take too long, without knowing first, and that makes the people with money mad or sad. This is very bad because of how much money and time these big jobs take.

There is a way to tell, and people have to use it or be in bad trouble, but doing it right is very hard and takes lots of time and hard work. This makes the worker people have to work very very hard, because the big job is hard work already.

We make computers do some of the hard part better than the old way, and better than the old computers, but it is still hard. Computers need help from people. We work with the worker people and ask questions to help them tell the computer how to do the hard thing. People have to work with each other and computers to get the answers, and they have to do it every week or month to keep getting money for the big job.

Then the people who give the money and want the space car or power place or air car come and look at the answers, and say if the big job is doing good.

(Via MeFi.)