John Siracusa breaks down why the software in your TV blows chunks.

Category Archives: Geek

HOLY CRAP this is an actual bug

The Brazilian Treehopper is the goofiest weirdest thing you’ll see today.

Dept. of HOLY CRAP THAT’S AWESOME

If you have Chrome on your computer, you should immediately check this out. However, as noted in the disclaimer, please do not attempt to use this simulation for interstellar navigation.

(If you’re anything like me, this sort of reminds you of this famous short film.)

TRUE HEAVY METAL

These guys are WAY more metal than your favorite band. Seriously.

Because they’re robots. No, seriously. Robots. The drummer has four arms. The guitarist has 78 fingers.

(Also, you have no idea how many Terminator themed headlines I avoided in writing this post.)

So, what IS Twitter for?

This is the best thing to ever happen on Twitter, period. Seriously. Check it out.

Yet another reason to want to be Lord British when you grow up

Buried in this rundown of Richard Garriott’s Mayan Apocalypse party is this delightful paragraph:

Garriott, 51, who made his first million after developing the MMORPG (massively multiplayer online role-playing game) “Ultima” while in his early twenties, has become known for his ostentatious and theatrical gatherings that give his guests a chance to visit a live version of his virual worlds. His first big parties date back to the late eighties when he began hosting elaborate haunted houses. Perhaps his boldest bash was his Titanic-themed party in 1998—he decorated a barge as the doomed ship, loaded it with VIPs in tuxedos and ball gowns, and then made the vessel sink in Lake Austin, forcing his guests, including the then-mayor of Austin, Kirk Watson, to swim to shore.

That man knows how to live.

This Christmas, in Deep Nerdland

If you happen to be the guy who dates this person, you’ll come to understand that her father is kind of a big deal in geek circles, and (we suppose) if you’re very, very good, you might get the most awesome nerd Christmas present EVER.

All I wanted for Christmas. In 1983.

Feast your eyes, nerdy Heathen, on the 1983 Radio Shack TRS-80 Catalog.

(Via BoingBoing.)

BEST LAMP EVER. Also, BEST ROBOT EVER.

Go check out Pinokio, which is, astonishingly, a student project.

Maybe it’s time to axe that WOW subscription

Penny Arcade nails it.

“They’re Endless-ly Delicious!”

So back in the 1970s and 1980s, Hostess ran one-page ads in comic books that starred popular heroes who invariably endorsed the cupcakes or whatever as part of a quickie bit of do-gooding.

Sandman was mostly later, but that didn’t stop some enterprising soul from making their own Endless Hostess ad.

Today’s entry in BEST TUMBLR EVER

Dave Cockrum 70s is just what it says on the tin: a collection of Marvel covers by Cockrum in the era of broad collars and flared shoulders. I had no small number of these as a kid.

(Via MeFi.)

Because ferrofluid is awesome, that’s why

You should stop what you’re doing and go watch this really cool video.

Up Goer Five

All my nerd Heathen have already seen this, but the rest of you probably haven’t: XKCD explains the Saturn V rocket using only the 1,000 most commonly used words. Example:

This end should point toward the ground if you want to go to space. If it starts pointing toward space you are having a bad problem and you will not go to space today.

“They started to worry about the next Supreme Court Justices while they coded.”

You’ve probably heard about the GOP’s tech meltdown with “Orca.”

Now go read about how much ass the Obama campaign’s tech kicked. (Long, but worth it.)

Bonus: blog post from the campaign’s goofy-haired CTO.

If you like numbers and cipherin’

Then you might want to check out this video on Graham’s Number. It’s big.

Via Mefi.

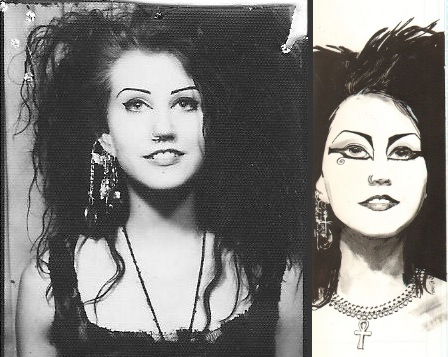

Who Death Was, and Is

On the left, an actual photo, from 1989, of a woman named Cinamon Hadley. On the right, Mike Dringenberg‘s drawing of Death, whom he co-created with Neil Gaiman.

That the former was the inspiration and model for the latter is not a matter of conjecture. Dringenberg apparently drew her often. Even Neil says so.

Now in her early forties, just like the rest of us, she’s returned to Salt Lake City, but I was surprised to learn that, for a while, she lived here in Houston. Weird.

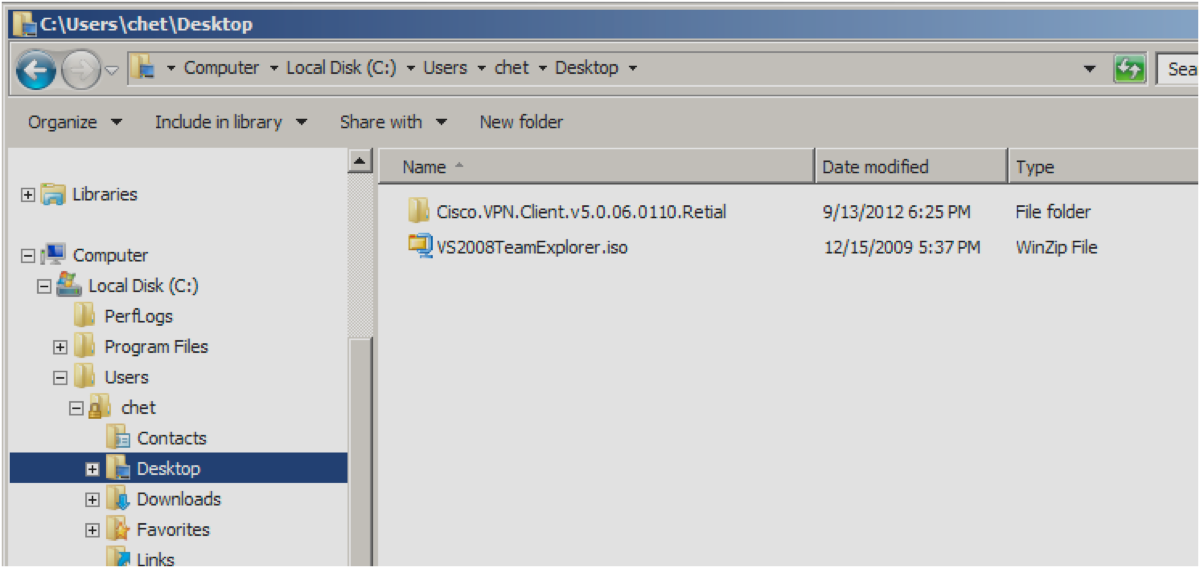

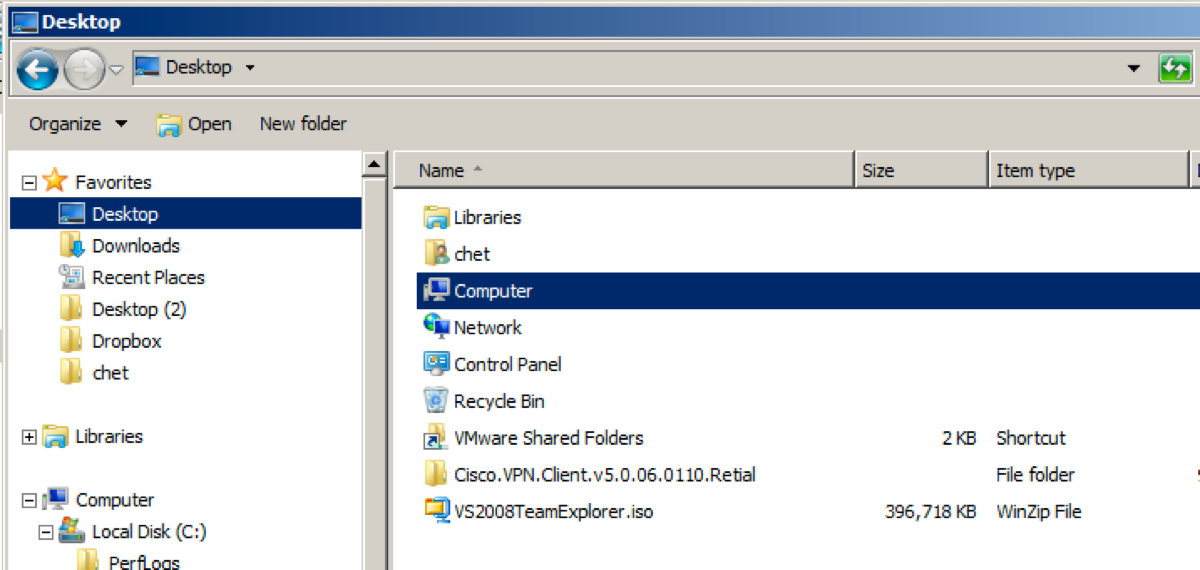

Oh, Windows. Will you never stop being stupid and weird?

I just realized something kind of breathtaking in its awesome boneheadedness.

The “Desktop” folder in Windows has always been a trouble spot for them, conceptually. They do their level best to convince you that the Desktop is actually the “top” of your whole computing tree, but that’s materially not true; actually, the “desktop” is just a folder in your user directory. Drill far enough down, and you’ll find that the “desktop” Windows Explorer shows you at the top will contain, inside a nest of other folders, itself.

Yeah, turtles all the way down. I’m sure that’s NEVER confused anyone.

Well anyway, I work off the desktop, mostly, in my Windows VM. I drop working files there, so I interact with it quite often. I keep little in the VM long-term, so I don’t want things getting filed away in My Documents or whatever where out of sight becomes out of mind.

It turns out that if you drill down to the desktop normally — usually, by choosing “Computer” and then “C:” and then “users”, then your username, and then “desktop” — you see pretty much the correct contents:

But if you make the Desktop folder a favorite by dragging it to the navigation bar in Windows Explorer, it apparently becomes the MAGIC DESKTOP, and Microsoft helpfully adds a bunch of other clutter:

Look! Extra shit in one view that’s missing in the other — in what should be exactly the same view. Nice.
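If you want to see what is actually sitting in the folder on disk, as opposed to whatever Explorer decides to decorate it with, a few lines of Python will do it. This is only a sketch: it assumes the Desktop lives at `Desktop` under your home directory (not true if the folder has been redirected somewhere else), and `default_desktop` and `list_folder` are names I made up for illustration.

```python
from pathlib import Path
from typing import List, Optional


def default_desktop(home: Optional[Path] = None) -> Path:
    """Return <home>/Desktop. ASSUMPTION: the Desktop folder has not been redirected."""
    return (home or Path.home()) / "Desktop"


def list_folder(folder: Path) -> List[str]:
    """Return the sorted names of the entries physically stored in `folder`."""
    return sorted(entry.name for entry in folder.iterdir())


if __name__ == "__main__":
    desktop = default_desktop()
    if desktop.is_dir():
        # Prints only what is really in the directory; no shell extras.
        for name in list_folder(desktop):
            print(name)
    else:
        print(f"No Desktop folder found at {desktop}")
```

Whatever shows up in the “favorite” view but not in this listing is something the shell added, not something stored in the folder.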

There does not, by the way, appear to be a way to:

a. Remove these stupid extra links; or

b. Create a “favorite” link to my desktop folder that doesn’t include them.

And they wonder why people hate Windows.

There’s a thing in computing called “the principle of least surprise.” It’s the idea that, when you’re building a system, you don’t want to shock the user with unexpected behavior. This is an excellent example of a violation of that rule, and of the kind of bullshit that happens when you design by committee.

I don’t understand why we don’t do this all the time

Liquid nitrogen + warm water + 1,500 ping-pong balls == AWESOME.

You really need to visit Google’s homepage today

Seriously. It’s interactive.

Cosplay: Now over

Because, seriously, what could top (or out-creep) this Bert and Ernie pair?

io9 Says This Is The Best Godzilla Photo EVER

The Absolute Worst Thing About Late 20th Century Comics

No, seriously.

For extra added bonus fun, someone has created a rendering of what the cinematic version of Captain America would look like if he were built like Liefeld’s version.

Dept. of Excellence Elsewhere

This great post and thread at MetaFilter covers my early computing life rather thoroughly.

Apple had effectively no presence in south Mississippi in the early 1980s, but Radio Shack was there. My friend Rob had a no-shit TRS-80 Model 1; my friend Paul got a TRS-80 Color Computer soon after. Eventually, I got a Color Computer II, which was the first machine of my own that I wrote code for — before this, I’d written some BASIC on other oddball micros over at USM.

That CoCoII (which I think is in a closet here in Houston as I type this) had no disk drive. Instead, I stored programs and files on a cassette drive, which was WAY cheaper. And, of course, way more prone to failure. Interestingly, the word processor I used all through high school was cartridge-based, like an Atari game, which had at least one advantage over floppy-based programs in that the cartridge bus was many times faster. I didn’t realize this was a Thing until later, when I was first using a dual-floppy PC at the high school and couldn’t figure out why the word processor took so long to change between modes…

I left the tiny computer world in 1988, when I bought an AT clone for college, but parts of my nerdy heart will forever belong to Tandy and their computing family, first introduced now 35 years ago. Ouch.

(Oh, and I still have one of these somewhere. I took notes on it in college. Back then, laptops were prohibitively fiddly and heavy, but this little bastard ran for weeks on AAs. I’d transfer the files to my desktop with a null modem cable, since back then there was no wifi and there were no SD cards.)

Oh, I have these scars

The Enterprise IT Adoption Cycle pithily and accurately captures something I fight daily: how utterly hidebound and obstructionist most big-company IT is.

Seen on Reddit

Take that, Olympics.

Note to self: Do not swim in this area

This slow-motion, high-definition footage of great white sharks breaching to snap up seal decoys is pretty amazing. Giant animals, completely out of the water. Eek.

Also, it appears that some of these scientists may need a bigger boat.

“Seven Minutes of Terror”

You may have heard that we’re sending another probe to Mars.

Once it hits the Martian atmosphere, it takes about 7 minutes for it to reach the surface.

Mars is far enough away, though, that messages from the lander take 14 minutes. Ergo, by the time we hear it’s hit the atmosphere, it’s either safe on the ground or a pile of junk.
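For anyone who wants to check the arithmetic, here is a minimal Python sketch. It uses only the two figures quoted above (a 14-minute signal delay and a 7-minute descent) plus the speed of light; the function names are mine, made up for illustration.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # defined constant, km per second


def one_way_distance_km(delay_minutes: float) -> float:
    """Distance a radio signal covers in `delay_minutes`."""
    return delay_minutes * 60 * SPEED_OF_LIGHT_KM_S


def minutes_since_landing(delay_minutes: float, descent_minutes: float) -> float:
    """How long the descent has been over when the 'hit the atmosphere'
    signal finally reaches Earth."""
    return delay_minutes - descent_minutes


if __name__ == "__main__":
    delay, descent = 14, 7  # minutes, as quoted in the post
    print(f"Mars is about {one_way_distance_km(delay) / 1e6:.0f} million km away")
    print(f"Descent finished {minutes_since_landing(delay, descent)} minutes "
          "before we hear that it started")
```

With those numbers, the whole descent has been over for 7 minutes by the time the first signal arrives, and Mars works out to roughly 252 million km away.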

How they plan to manage this is a pretty interesting story. Make time for this today. (Here’s a permalink if you miss it today.)

The Curiosity rover will touch down — or crash horribly — on Monday, August 6, at 12:31 AM.

h/t: The Mant.

I have never before wished AT ALL that I lived in Kansas

Google is starting its fiber rollout; the initial neighborhoods in Kansas City will have the option of a ONE GIGABIT CONNECTION — both ways.

Existing telcos in whatever markets Google identifies are, I’m sure, absolutely shitting their pants. And should be.

It’s possible some of you aren’t nerdy enough to realize how big a deal this is. When I say “a gigabit connection,” I mean a connection that delivers 1 gigabit of data per second. A gigabit is 1,000 megabits. A megabit is 1,000 kilobits.

Initial DSL connections were usually in the 400 to 600 kilobit range for download speeds. Now it’s usually 1 to 3 megabit download and about 0.7 megabit upload, unless you pay extra. Here at Heathen Central, we buy the fattest pipe UVerse will sell us, which is theoretically 18 megabits down and 3 up. In practice, it’s more like 12 to 15 down and 2 up, but that’s normal.
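To put those numbers side by side, here is a rough Python sketch of how long one download would take at each speed. The speeds come from the paragraphs above; the 1 GB file size, the labels, and the `transfer_seconds` name are my own assumptions for illustration, and it ignores protocol overhead entirely.

```python
def transfer_seconds(size_gigabytes: float, megabits_per_second: float) -> float:
    """Ideal transfer time, using decimal units: 1 GB = 1,000 MB = 8,000 megabits."""
    size_megabits = size_gigabytes * 1000 * 8
    return size_megabits / megabits_per_second


# Download speeds in megabits per second, taken from the post.
SPEEDS_MBPS = {
    "early DSL (about 500 kilobits)": 0.5,
    "typical DSL (3 megabits)": 3,
    "U-verse in practice (15 megabits)": 15,
    "Google Fiber (1 gigabit)": 1000,
}

if __name__ == "__main__":
    file_gb = 1  # hypothetical file size
    for label, mbps in SPEEDS_MBPS.items():
        seconds = transfer_seconds(file_gb, mbps)
        print(f"{label}: {seconds:,.0f} seconds")
```

At a gigabit, that hypothetical 1 GB file takes 8 seconds; on early DSL it takes 16,000 seconds, which is nearly four and a half hours.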

1,000 megabits both ways? Shit yes. Sign me. AT&T can fuck off as soon as I get my hands on that, for sure. I mean, Google may be trending towards evil, but AT&T is the goddamn phone company. How much more evil can you be?

Of course he did

Back in the 60s, apparently, Jack Kirby did some theater costume designs for UC Santa Cruz. For a Shakespeare play.

Awesome.

Read it just for the phrase “plumes of hot meat”

Randall Munroe’s What If? tackles the question of “What would happen if you gathered a mole of moles?”

Somehow, I missed this AWESOME FACT

In this great behind-the-scenes short from The Hobbit, I discovered that Sylvester McCoy is in the film as Radagast, another of the wizards, i.e. a peer of Gandalf.

McCoy is of course already immortal in fandom as the 7th incarnation of the Doctor back in the 80s; his tenure — the 24th, 25th, and 26th seasons — closed out the original TV series, though he went on to do a TV movie in 1996.

(Giant Nerd Alert: Note that, in Tolkien, wizards are not human beings. They’re closer to angels or demigods.)

Game of Hipsters

I suddenly feel MUCH better about how much crap is in my backpack

Think I overpack? Go check out Woz.

ZOMG

Ever wonder what money is, really?

Yeah, me too. This Reddit post actually does a pretty bang-up job of explaining how barter, currency-as-marker-for-something-else, and modern fiat money all work.

Photos Of That Other Space Program

No, not the private sector one. The one that beat us to orbit.

The monument pictured is much more striking in person, which is sort of amazing given how cool the pictures are. I saw it in 1991.

I’m not sure if I need the shirt, but the sentiment is absolutely true

Well, shit.

Salon is looking to sell the Well, and has apparently already laid off its staff.

That’s been my online home for a really long time. Dialog there is better than on 99% of the web. It’s never been anonymous, which is probably one reason why. Before there was Facebook or social media, the Well was having gatherings and parties and picnics — like, in the 1980s.

With fewer than 2,700 subscribers left, I wonder how long it’ll even exist now — I mean, short of some deep-pocketed angel coming along to save it for the sake of saving it.

Today in Nerd Excellence

There is nothing not awesome about a slinky on a treadmill.

Coolest Wedding Ring EVER.

This one guy made his own out of a meteorite.

Best Shot Glass EVAR

Watch it being made. On a lathe. From candy.

It’s sort of a companion to the existentialist social networking post

Introducing Objectivist C. (via Maud Newton on Twitter.)

“You were born, and your inheritance was pain. Make sure you are connected to the Internet.”

Social media for existentialists.

“Your friends list is empty. It will always be empty.”

(Via @lemay on Twitter.)

Dept. of GAAAH

io9 points out that a child’s skull is actually pretty terrifying.

How much do you know about Tesla?

Are You Killing The Internet?

Who wants to play?

I just signed up on the back-order list for Cards Against Humanity, and have every intention of doing the “DIY Set” ASAP in the meantime.

Dear Atwood: Shut Up.

I’m not sure any post here has ever needed an epigram, but this one does:

Normal people don’t see exceptions to rules as a big deal, so they forget to mention them. This is why programmers drink so much. — Rob Norris

Rob is one of my oldest friends. I’ve known him for 30 years at least. He’s also a programmer by trade. This quote — which I pulled from a conversation we had about customers and requirements and the difficulty in building the thing the client needs, but doesn’t know how to ask for — could have come from any of a hundred or more conversations he and I have had about the issue over the years, or a thousand or more conversations I’ve had with other colleagues about precisely the same issue.

More than anything else, this is the crux of the thing that programmers and other software development professionals complain about when they go to lunch or happy hours. Not coincidentally, it’s probably also the basis for what noncoders complain about when they talk about programmers. It’s the key issue that separates a coder of any level from the rest of the planet: the ability to understand what the actual rules are, and why exceptions matter. You can’t get to the next level of technical literacy — which things are hard and which things are easy — without this. Taken together, a customer who understands enough about these two basic computing truths is (first) a way better customer to work with. There will be much less drinking! And second, he’s going to be a much happier customer, because communication with him will be easier. But without either, you almost can’t communicate at all.

In my entire 20+ year career of managing projects and writing code, I’ve had maybe one or two customers who actually understood these points. This is because, to a first approximation, nearly everyone outside the programming trade is technologically illiterate when it comes to software development. They don’t get it, and they have never tried to understand it at all. Their computers are mysteries to them. I’ve seen very, very smart people flail utterly when Excel did something they didn’t understand, and you have, too. This failure is because, in part, the machine is a mystery, and they have no idea how the underlying parts work.

So now, this year, there’s a movement called CodeYear whose goal is to teach anyone who’s curious the basics of programming. This is a GREAT idea. It’s not about making everyone a programmer, and it’s certainly not about trying to recruit more professionals. A year isn’t going to make anyone a wunderkind, or really even hirable most likely. What a year of spare time development tutorials will do, though, is make you more conversant in the concepts that, every day, drive an increasingly large proportion of the world around you.

Damn, you must be thinking, that’s a good idea! And it is. It’s a very, very good idea. Spreading understanding — about anything — is never a bad plan.

Unless, apparently, you’re Jeff Atwood over at Coding Horror, who has missed the point of this exercise so much that I really think he might be trolling. Atwood has been, up to now, a fairly well regarded development blogger. I read him from time to time. But he could NOT be more off base here. He comes off as a weird tech-priesthood elitist, and totally ignores the very basic points I note above.

In his misbegotten essay, he even suggests that swapping “coding” with “plumbing” shows everyone how ridiculous the idea is. Except, well, normal people already understand way more about plumbing than they do about programming, and a good chunk of them can fix a leaky faucet or clear out a sink trap on their own. Not only is it a good idea for folks to learn a bit about plumbing, in other words, it’s already happened, and there are entire libraries’ worth of DIY books to help you expand that knowledge as a layperson. Atwood is too in love with his metaphor to notice, I guess.

He runs on to whine that the effort will result in more bad code in the world, while “real” programmers strive to write as little as possible. While true, this is like saying children shouldn’t learn the basics of grammar because it’ll interfere with the work of poets.

Like I said, it’s almost like he’s trolling, and maybe he is. I’m sure Coding Horror is getting a massive influx of traffic because of the (nearly universal) condemnation of his reactionary rant. It’s more likely, though, that his tantrum is more due to the sort of knee-jerk contrarianism that runs through a great chunk of the technical world (why else do you think so many developers worship Ron Paul?), but that’s not really an excuse.

My hope is that most people will ignore Atwood’s rant, and instead avail themselves of the excellent resources that Codecademy has made available for this project. They are, I should note, free.

Finally, I’ll just offer this:

There’s a special place in hell for people who believe that working with technology is an unteachable priesthood.

— Jeffrey McManus (@jeffreymcmanus) May 15, 2012

Exactly. FWIW, Jeffrey also shared a couple more links on Twitter that are worth your time if this dialog interests you:

- Learning to code is a valuable life skill for everyone

- The Coding World View, which includes this excellent quote: “Learning to program is like literacy, it’s a new way to learn and engage with the world, and it’s one well-worth doing.”

Read ’em both.